Stay Updated with Retell Changelog


Safety Guardrails

Your agent can now refuse what it shouldn't engage with.

→ Block jailbreaks — prompt extraction, instruction bypasses, unauthorized tool calls. Caught and killed before they reach the caller.

→ Filter harmful output — harassment, self-harm, violence, gambling, regulated advice, sexual exploitation, child safety, and more. You choose which categories are off-limits.

→ Hard stops, not soft warnings — when a guardrail fires, the agent doesn't hedge. It won't produce that content. Period.

Enable per-agent under Security & Fallback in agent settings. Available on all plans.

Global Node Return Paths

Customers can now leave a global node and come back to exactly where they were in the conversation.

→ No lost progress — a caller asks about your callback policy mid-booking, gets the answer, and picks up right where they left off. No restart, no repeated questions.

→ "Actually, never mind" just works — if a customer triggers a global node then changes their mind, the flow resumes naturally, like a real conversation would.

Enable per-node in any global node's settings.

Webhook & Functions Test Tools

Stop deploying integrations blind.

→ Webhook testing — Hit Test, get a real payload sent to your endpoint. Same structure, same data your agent sends in production. Catch auth failures, bad URLs, and schema mismatches before a single live call.

→ Custom function testing — See variable substitution, test connectivity, preview the response. No live call required.

Available for Agent Webhooks, Alert Webhooks, and Custom Functions.

Always Edges — Guaranteed Transitions

Force any conversation node to move forward, no matter what.

→ Overrides everything: an Always edge overrides all other transitions on that node. One path, guaranteed.

→ You control timing — "After User Responds" waits for input first. "Skip User Response" transitions immediately.

Add via the transition dropdown → Always/Skip, then set your transition mode. This replaces the old skip-response toggle with a more flexible model.

Other Platform Upgrades

Audio Transcription Auto-Failover — Deepgram goes down? We switch to Azure. Azure goes down? We switch to Deepgram. Mid-call. No audio dropped.

Batch Testing API — Define test cases, run them in bulk, get results back — all via API.

Branded Caller ID (Custom Telephony) — Show your company name on outbound calls and reduce spam flags. Now works with your own telephony numbers, not just Retell-managed. U.S. only. Requires business profile.

Advanced Call & Chat Filtering — Filter by custom analysis data and dynamic variables. Pin specific agent versions to pull from.

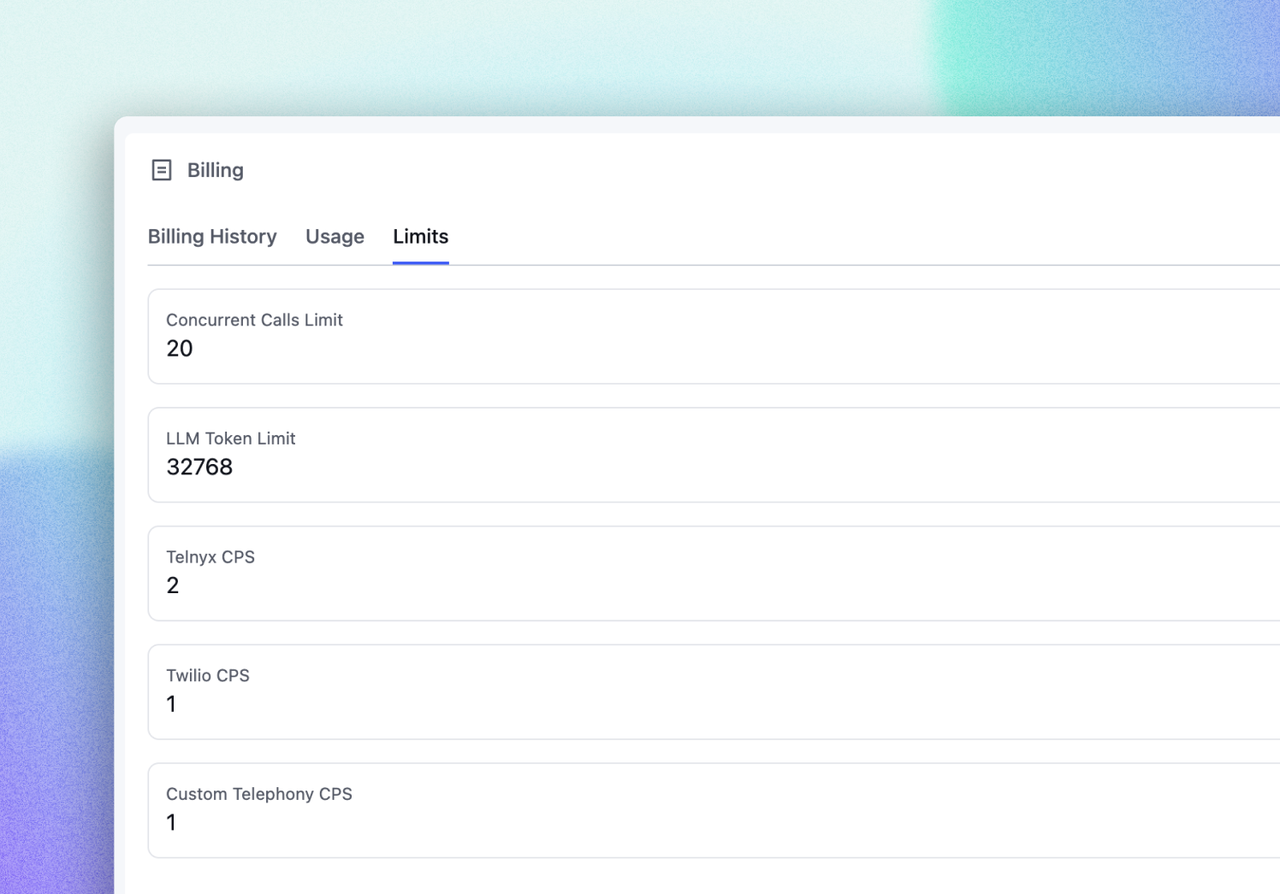

Billing Transparency Changes

TTS & Voice Engine — Now Separate Line Items

Your invoice previously bundled TTS and voice engine costs together. They're now broken out — you can see exactly what each costs across voice engines, LLMs, and telephony.

Concurrency — Billed Upfront, Prorated by Day

Concurrency is now charged when you add it, not at the end of the month. Add capacity mid-cycle and pay only for the remaining days.

Model Migrations

Seven deprecated models have been automatically replaced. If your agents used any of these, they're already running the new versions:

- claude-3.5-haiku → claude-3.5-haiku-v2

- claude-3.7-sonnet → claude-4.5-sonnet

- claude-4.0-sonnet → claude-4.5-sonnet

- gemini-2.0-flash → gemini-2.5-flash

- gemini-2.0-flash-lite → gemini-2.5-flash-lite

- gpt-4o-mini-realtime → gpt-realtime-mini

- gpt-4o-realtime → gpt-realtime

GPT-4.1 is not being deprecated on Retell. OpenAI recently removed it from ChatGPT. We've seen some of you asking about this — GPT-4.1 is staying on Retell. Your agents running it continue to work as-is.

We're thrilled to share some exciting new features and updates on the platform. Here’s what’s new:

AI QA Analyst

AI QA automatically reviews your calls and provides crucial feedback 24/7.

With AI QA, you can:

→ Surface patterns in your calls: Find and fix systematic issues without getting stuck on one-off bad calls.

→ Track resolution rates across every call: Transfer rate, disconnect reasons, and repeat contact patterns, broken down by agent, call type, and time period.

→ Catch what humans can't: Hallucination rate, sentiment shifts, tool accuracy — measured automatically across every call.

The first 100 minutes of analysis are free.

Alerting

With Alerting you can set triggers that alert you the moment something goes wrong with your agents.

Alerting lets you:

→ Know when an agent burns $100 in minutes, success rate hits 0%, sentiment drops 20% and more.

→ Get alerts delivered via email, or integrate them into your existing tools via webhook.

→ Use smart thresholds so you only get alerted when it actually matters.

It only takes 2 minutes to set up your first alert.

Conversation Flow Node Search

Jump to any node in seconds using the search box or Cmd/Ctrl+F to review and audit large flows faster.

Other Platform Upgrades

Export Call History - Export any of your agents' entire call history with URLs.

Voice API — Add-voice, search-voice, and clone-voice APIs are now exposed through the SDK.

Custom Transcription Config - You can now choose between optimizing for accuracy or latency by changing the number of endpoints used.

HubSpot Integration — Retell is now available through the HubSpot Marketplace.

Auto IVR Hangup — You now have an option to automatically hang up if your agent encounters an IVR system.

Auto transcription fallback — If Deepgram or Azure has an outage, we seamlessly switch to the other provider without dropping audio, even mid-call.

Voice Emotion Control — Cartesia Sonic-3 models now support Happy, Surprised, Sympathetic, and Calm. MiniMax voice models now support Happy, Surprised, and Calm

Concurrency Blast — Allows up to the lower of 3x your concurrency limit or 300 additional concurrent calls. $0.1/minute for burst usage.

Flex Mode for Conversation Flows

Flex Mode lets your agents navigate flexibly between nodes as customers jump between topics, have multiple requests, or change direction mid-call.

With the introduction of Flex Mode, you can:

- Control when your conversation agent switches between rigid and flexible behaviour using components

- Use flexible mode when you need natural navigation across nodes without repetition or restarts

- Use rigid mode when you need strict, error-proof sequencing

Perfect for complex support flows, multi-service requests, and any conversation where customers have multiple/varied requests in a single call.

Enable at Agent or Component level. Best for flows under 20 nodes.

AI Assisted Warm Transfers

Advanced Warm Transfer adds a live AI handoff assistant that lets your human agents engage with the AI before accepting or declining a call.

With Advanced Warm Transfer, you can:

- Let transfer targets confirm they're ready before bridging the call

- Exchange information - AI shares context, humans can ask questions

- Reject transfers that don't meet criteria (e.g., lead too small, wrong department)

- Prep your reps with full call context before they speak to the customer

The handoff assistant is fully configurable - build simple briefings or complex multi-step workflows with function calls and more.

Country Level Call Controls

Country Restrictions are now available on all numbers. Block inbound and outbound calls from and to countries you don't want connecting with your agent.

Other Platform Upgrades

Agent Version Comparison — Compare any two agent versions with semantic diff. View changes side-by-side, with difference-level highlighting.

Rerun Post-Call Analysis — Modify analysis prompts and regenerate summaries across historical calls without re-running the calls.

Per-Call Agent Override — Dynamically override agent configuration at the call level for both inbound and outbound calls.

Concurrency Dashboard — Monitor active call concurrency over the past 24 hours directly in Analytics.

Custom Function Constants — Define fixed parameters in custom functions using static values or dynamic variables.

Chat Agent SDK — Full chat agent API support now available in the SDK.

Phone Number Editing — Modify custom telephony and Retell numbers directly from the dashboard.

New Models & Voices

New Models: GPT-5.2, Gemini 3 Flash, Claude 4.5 Haiku & Sonnet

New voices & voice providers:

- MiniMax (new provider): 40 languages and voice cloning

- 11Labs (new feature): professional voice imports

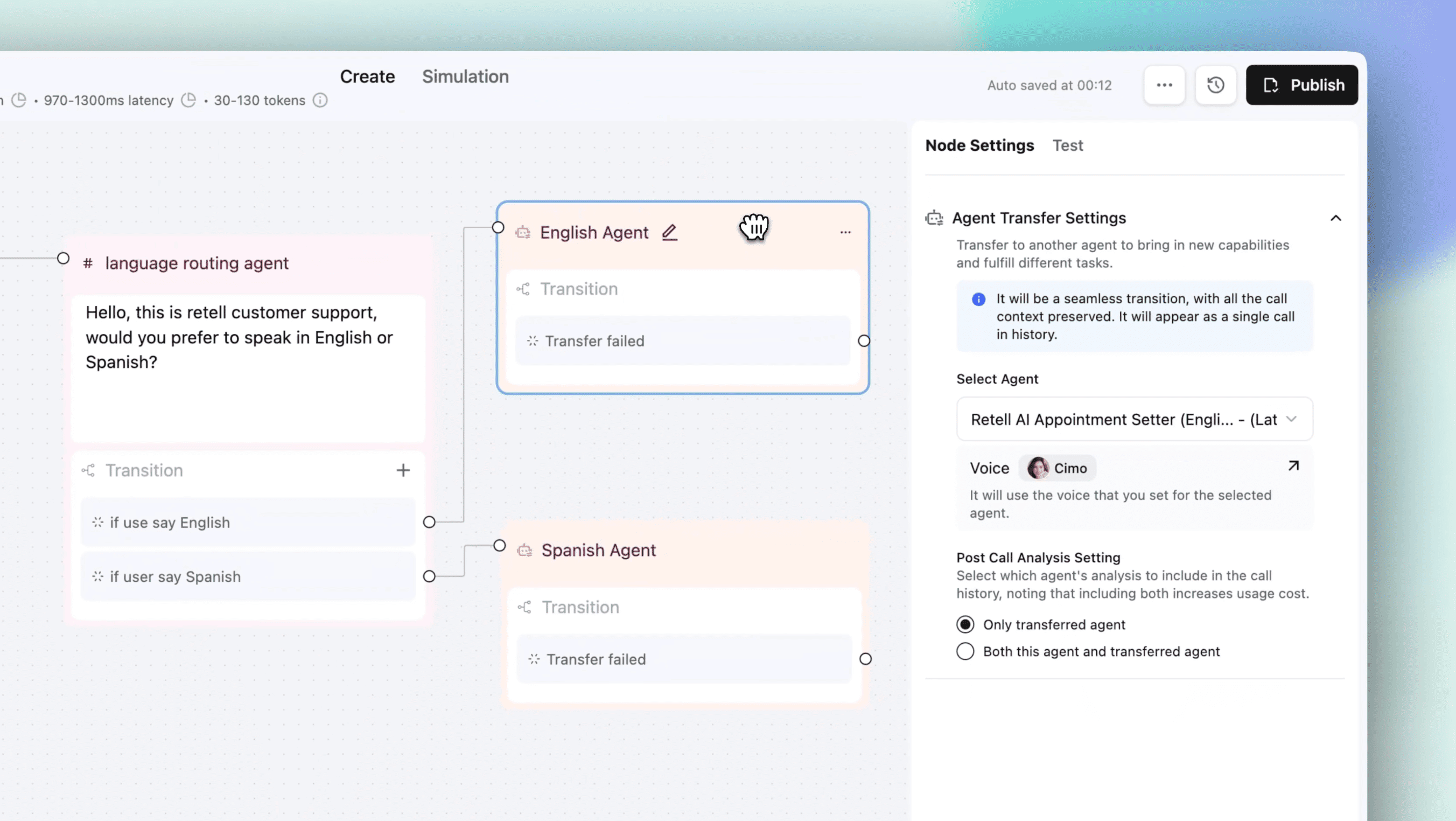

Conversation Flow Components are Live! - Reusable flows & functions across agents.

Build reusable sub-flows and functions to keep your agents maintainable and consistent across your entire account with our new Components. Update a component once, and it syncs with every agent that uses it.

Example use cases:

- Security check — "Before I help you, can I verify your account? What's your date of birth and the last 4 digits of your phone number?" → use billing/support component

- Language specific sub-flows — "I can help you in English or Spanish. Which would you prefer?" → transfers to the right language component

- End-of-call survey — "On a scale of 1-5, how satisfied were you with this call? What could we improve?" → use a follow-up component

Get started: Agent Builder → Components Tab → + Create → Build your component flow

Join the conversation on LinkedIn

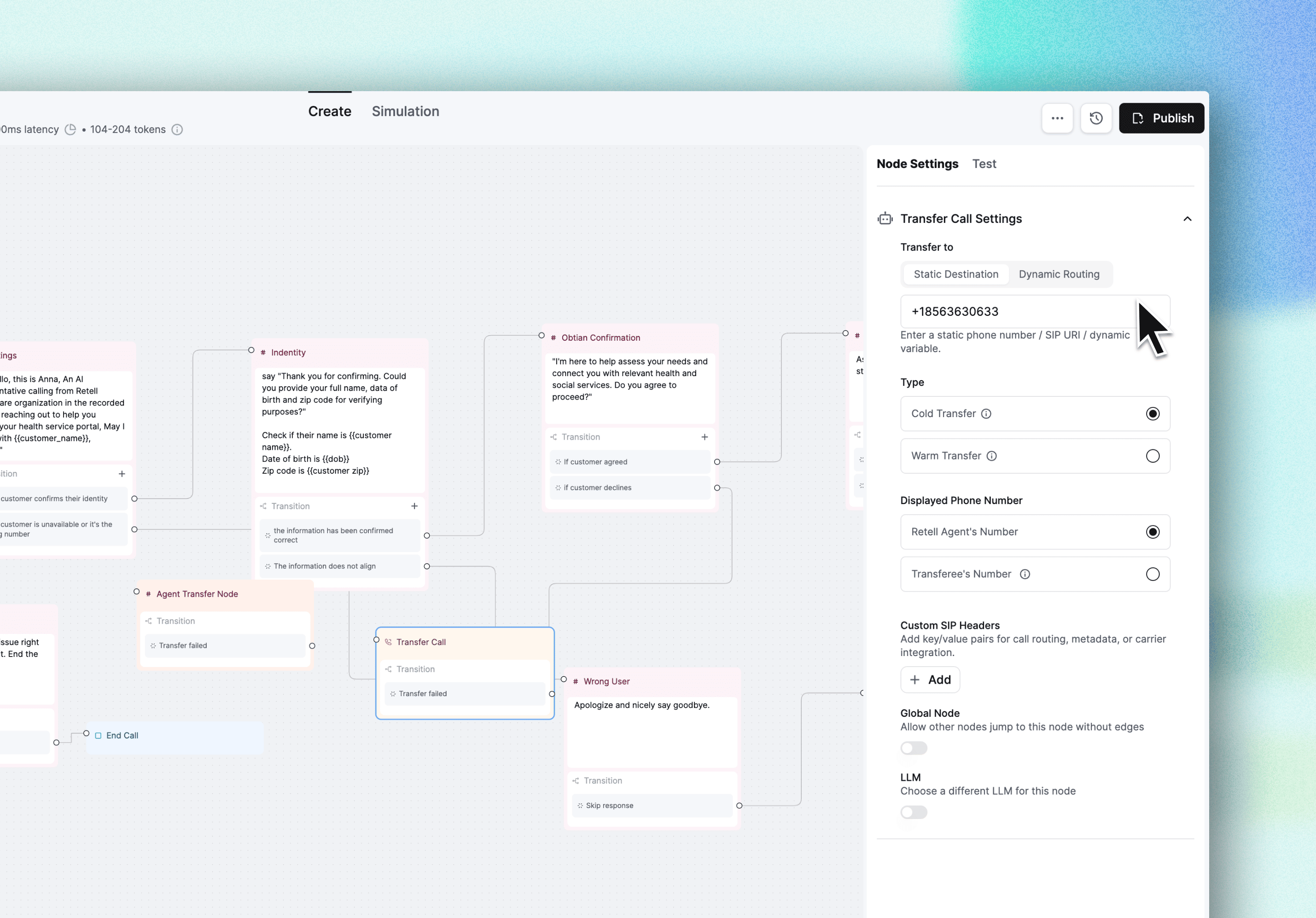

Warm Transfer Caller ID - No more "Who called?" confusion

You can now control which caller ID appears when transferring calls, warm and cold — showing either your AI agent's number or the original caller's number.

Why this matters:

When your sales or support team sees the real caller's number, they can pull up CRM records instantly before picking up and call customers back if the call drops. Critical for lead qualification workflows and HIPAA-compliant healthcare operations where caller tracking is required.

Set it up: Call Transfer Node → Caller ID Settings → Select "User's Number"


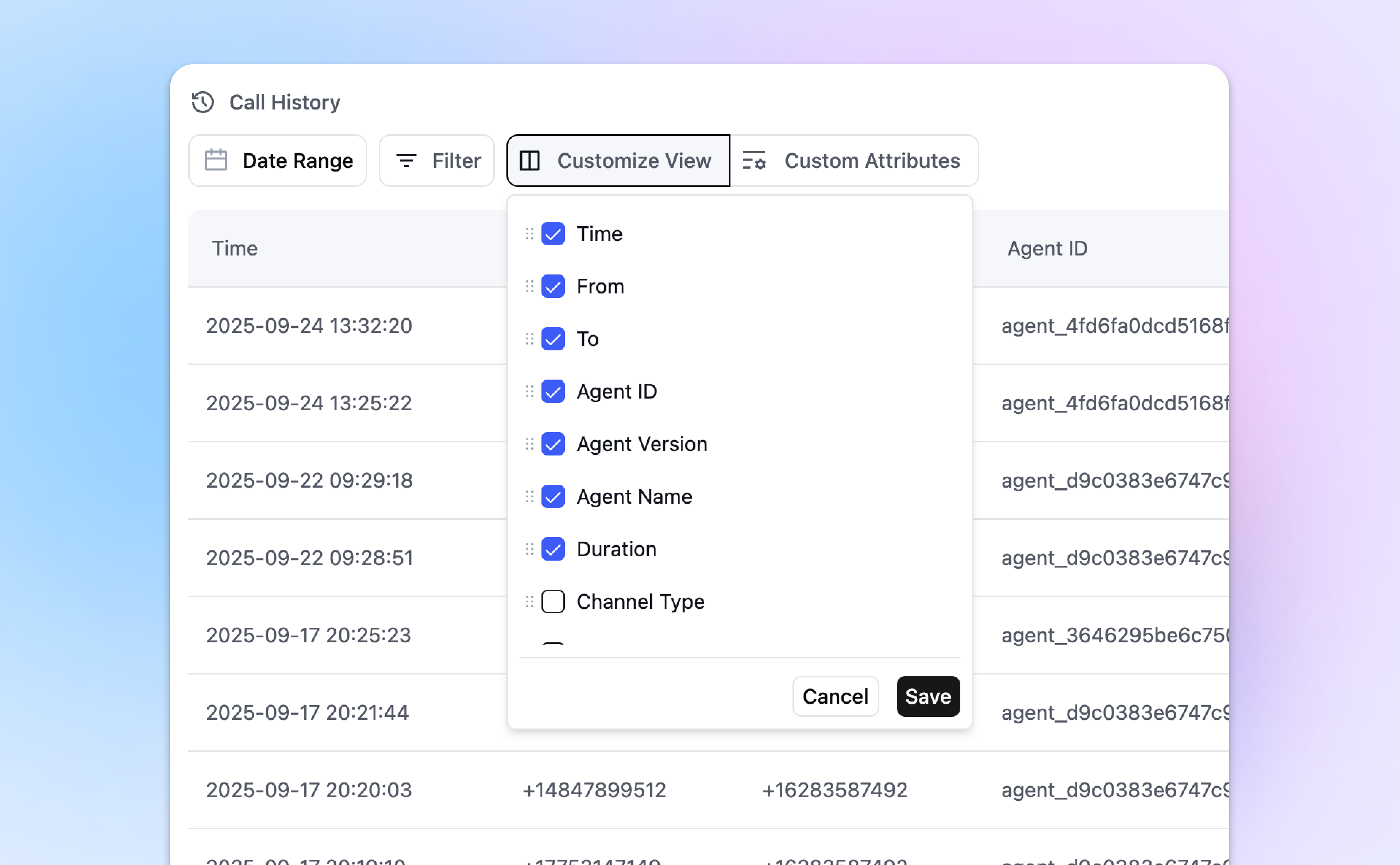

Call History: Track What Matters

You can now add custom fields to track exactly what matters for your business and reorder your Call History columns to match your workflow.

- Custom Attributes — Add your own fields for deeper tracking and context. Track appointment IDs, lead scores, customer tier, or any data point your team needs to see at a glance.

- Custom Column Arrange — Reorder your Call History view to prioritize what you check first. Put duration up front for billing teams, or lead source first for sales teams.

Set it up: Call History → Customize View → Add Custom Attribute / Drag to Reorder

Other Improvements

- Claude 4.5 Sonnet support — Now available for all agents

- Recommended Voices — Get started faster with top-performing voices surfaced at the top.

- Webhook settings in Agent Swap — Configure which agent's webhook endpoint to use for sending call updates.

- Simulation test case management — Import/export test cases as JSON, duplicate them in the editor, and auto-copy them when duplicating agents

- Batch calls override: Override which agent handles calls in batch operations with override_agent_id.

- Fields in Post-Call Analysis are now optional

Important: Model Deprecation & Speaker Requirements

The following LLM models have been deprecated. Please update your agents to the latest models.

- OpenAI:

  - gpt-4o → gpt-4.1

  - gpt-4o-mini → gpt-4.1-mini

- Anthropic:

  - claude-3.7-sonnet and claude-4.0-sonnet → claude-4.5-sonnet

- Google:

  - gemini-2.0-flash → gemini-2.5-flash

  - gemini-2.0-flash-lite → gemini-2.5-flash-lite

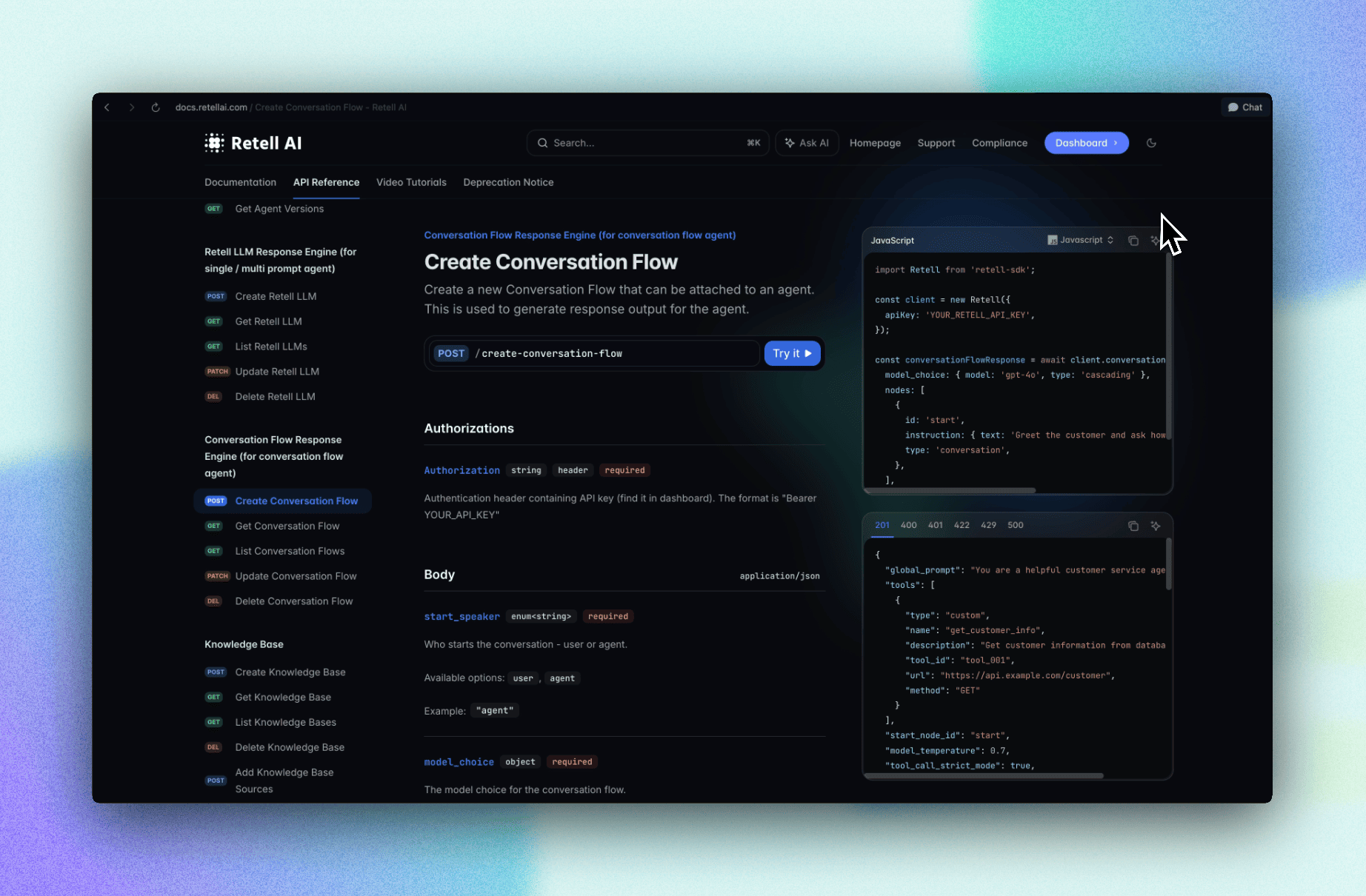

Additionally, start_speaker is now also required in the create-retell-llm API.
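As a rough illustration, a create-retell-llm request that includes start_speaker might be built like this. This is a minimal sketch, not the official client: the endpoint URL, the bearer-token header, the `general_prompt` field, and the allowed values `user` and `agent` are assumptions that should be checked against the API reference. The request is only constructed here, never sent.

```python
import json
import urllib.request

# Assumed endpoint; verify against the API reference before use.
API_URL = "https://api.retellai.com/create-retell-llm"


def build_create_llm_request(api_key: str, prompt: str, start_speaker: str) -> urllib.request.Request:
    """Build (but do not send) a create-retell-llm request."""
    if start_speaker not in ("user", "agent"):  # assumed allowed values
        raise ValueError("start_speaker must be 'user' or 'agent'")
    body = {
        "general_prompt": prompt,        # assumed field name
        "start_speaker": start_speaker,  # required per this changelog entry
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_create_llm_request("YOUR_API_KEY", "You are a helpful receptionist.", "agent")
payload = json.loads(req.data)
```

Validating the value locally means a missing or misspelled start_speaker fails before any network call is made.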

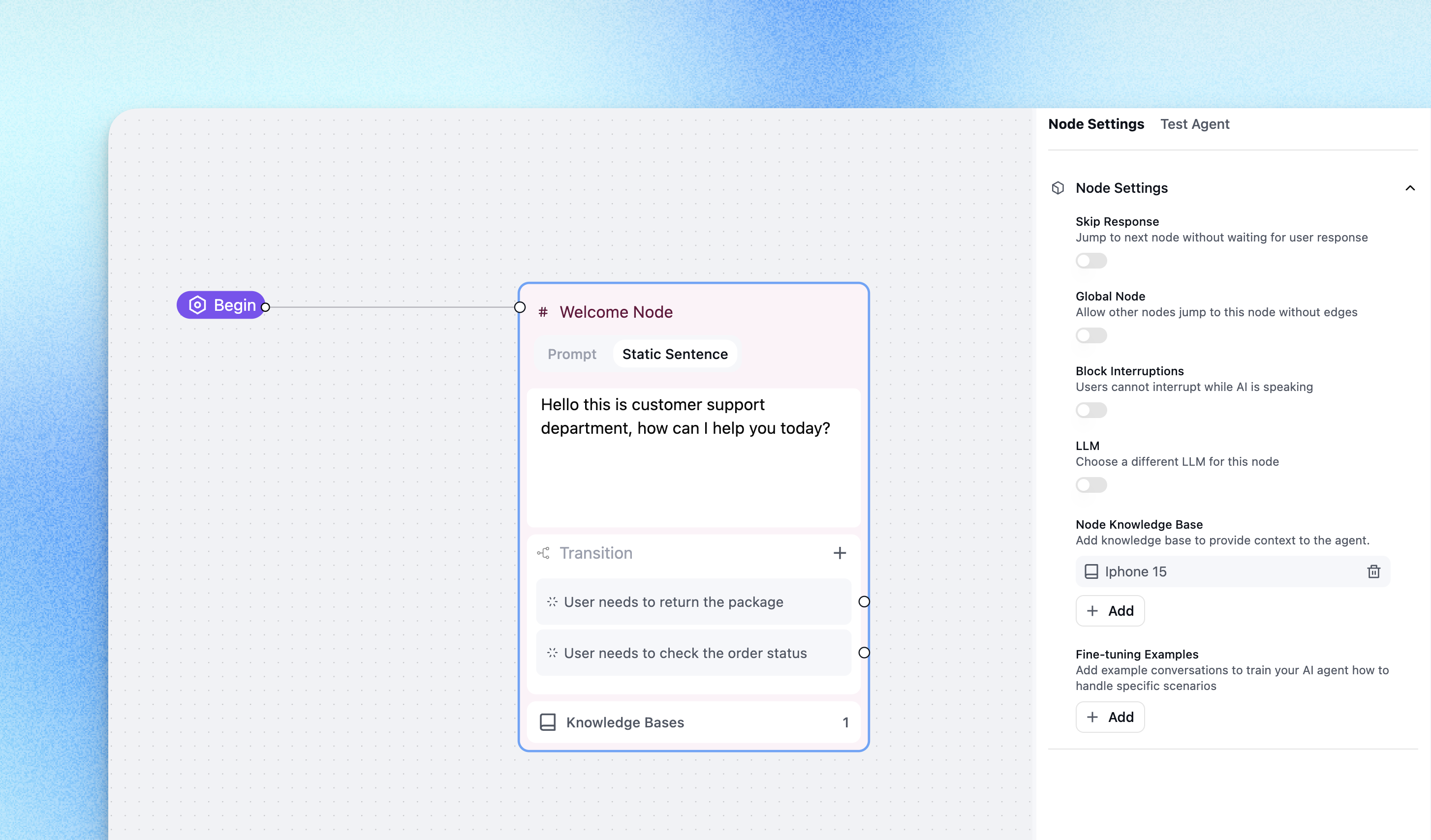

Node KB — Smarter Knowledge Access at the Node Level

Previously, knowledge bases could only be set globally. With Node KB, you can now assign a specific knowledge base to a specific node in your conversation flow.

This update allows agents to:

- Retrieve more accurate answers based on context

- Respond with lower latency by narrowing the search space

- Deliver higher customer satisfaction with precise responses

Start Using Node KB Today

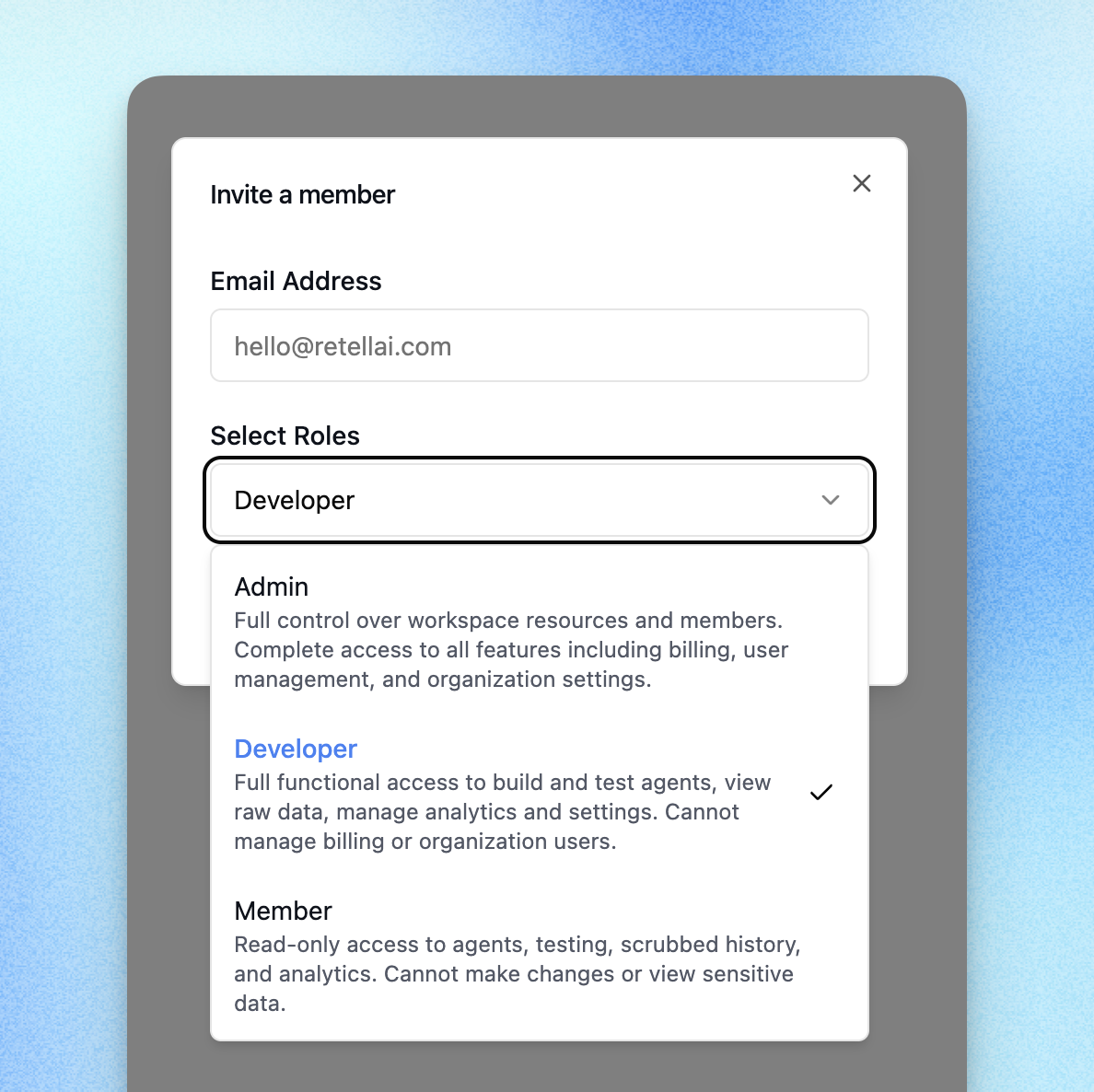

Role-Based User Manager — Safer Collaboration

Until now, any team member could change critical settings. With the new Role-Based User Manager, you can assign access levels across your team:

- Admin — Full control, including billing & user management

- Developer — Build/test agents, manage analytics & settings

- Member — Read-only access, no changes or sensitive data

This makes it possible to:

- Protect sensitive configurations

- Control permissions at scale

- Ensure safer collaboration across your team

👇 Assign roles today in the User Manager.

Security Update — Public API Keys & Widgets with reCAPTCHA

To protect against toll fraud and spam, we’ve added Google reCAPTCHA to Retell’s Public API keys, chat, and call widgets.

This makes your account:

- More secure against bots and fake traffic

- Easier to manage with a 5-minute setup

- Protected from fraud that could lead to high costs

✨ How it works

- Go to Public API Keys → Edit Key

- Toggle Abuse Prevention (Google reCAPTCHA)

- Add your reCAPTCHA secret key and set a score threshold

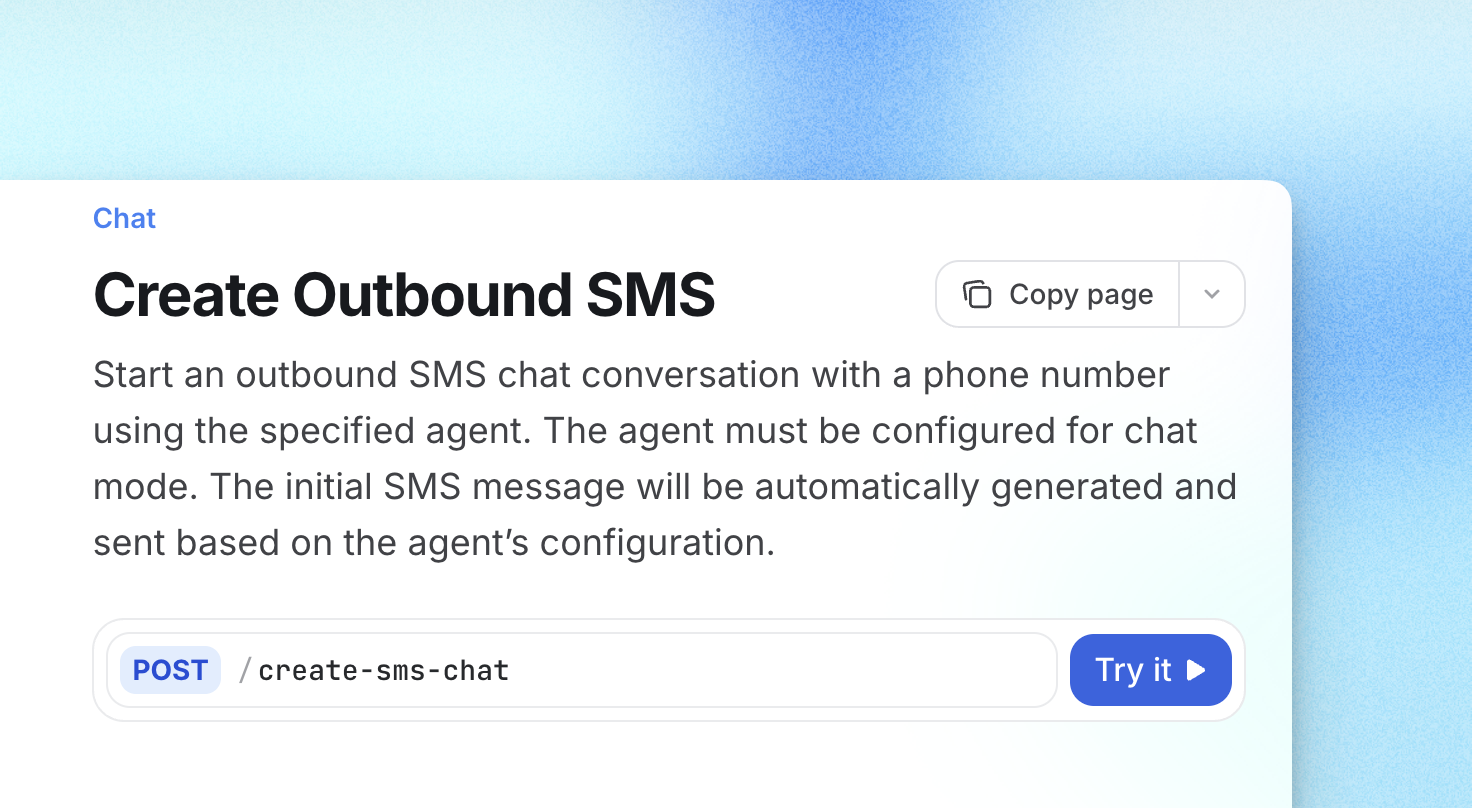

Outbound SMS Via API — Agents Can Now Reach Customers First

Your agents can now send Outbound SMS directly to customers, not just reply when contacted.

With this release, agents can:

- Send reminders, alerts, and follow-ups instantly

- Re-engage inactive customers with proactive outreach

- Handle time-sensitive updates without waiting for inbound contact

👇 Get started with Outbound SMS

Phone Extensions & IVR Navigation

Your Retell AI agents can now automatically navigate IVR menus using DTMF (digit-press) inputs — no more waiting around or manual instructions needed.

With this update, agents can:

- Navigate multi-level IVR systems automatically (e.g., press 1 for Sales, 2 for Support).

- Use the Press Digit node in the agent builder to configure when and what digits to press.

- Handle detection delays to ensure they don’t trigger prematurely, making navigation smoother and more accurate.

Try it now with the Press Digit function in your workflow

Batch Call Window

Until now, batch calls could only be launched immediately or scheduled at a single time. With Batch Call Window, you can now define exactly when calls are allowed to run — setting specific hours and days of the week.

- Prevent calls from going out after hours

- Control concurrency across campaigns

- Ensure outreach stays within business rules

👉 Start using Batch Call Window today to keep your campaigns on-time, efficient, and compliant.

Other Improvements

- Default Variables in Agent Config: You can now set default variable values directly in the agent configuration for faster setup and consistency.

- Phone Extension & IVR Navigation: Agents can now transfer calls directly to extensions and automatically navigate IVR menus, no more manual keypresses.

- Custom Filters in Call & Chat History: Call and chat history now supports filtering by custom post-call analysis fields, including enum, boolean, and number types, giving you more precise ways to analyze interactions.

- New Settings Page: A redesigned settings page has been introduced, consolidating four key pages into one for easier navigation and cleaner management.

Telnyx Pricing Adjustment

The call rate for Retell Telnyx phone numbers will be adjusted from $0.015/min to $0.020/min.

This update will take effect starting September 1st.

Twilio phone number pricing will remain the same, with no changes to the current rate.

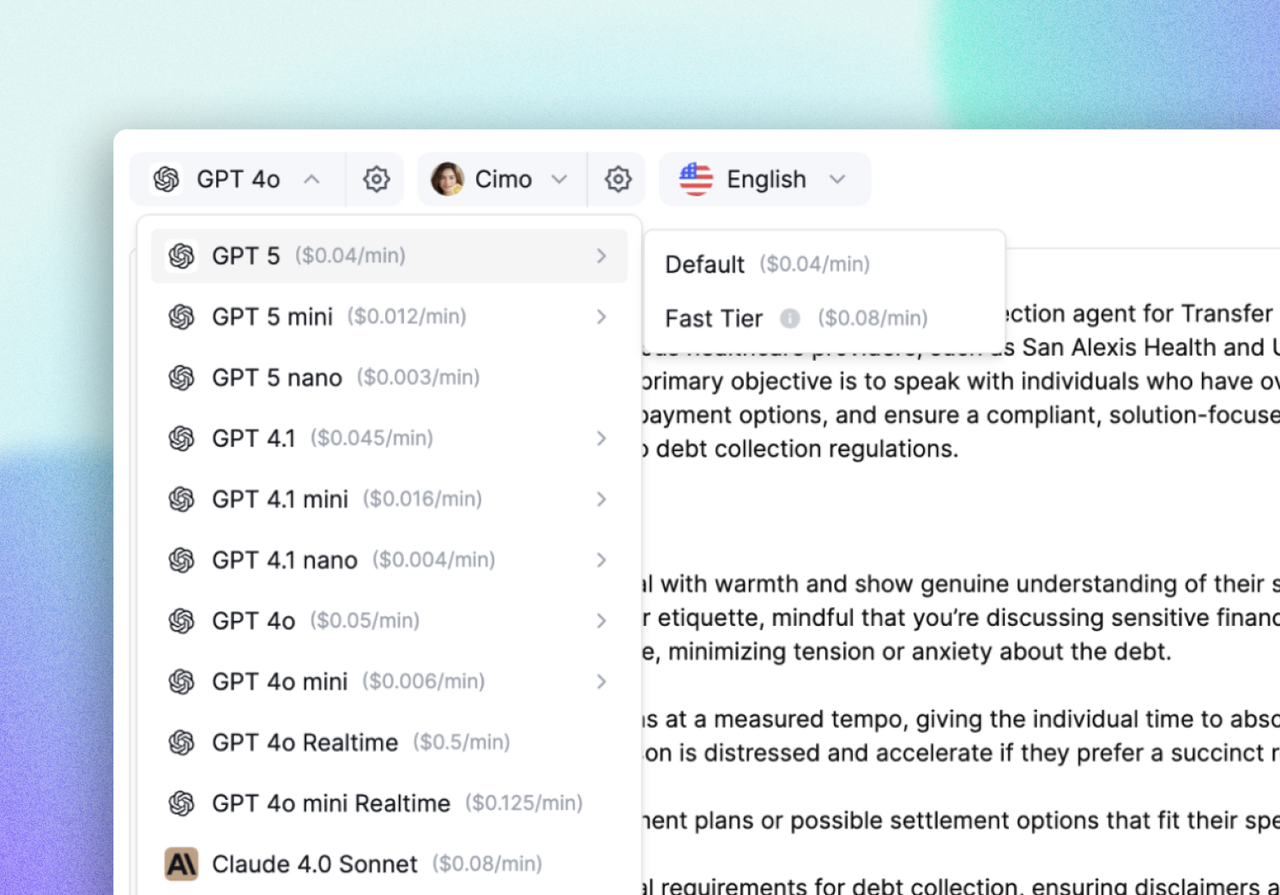

GPT-5 Models Now on Retell

We’ve added the latest GPT-5 family to Retell:

- GPT-5 → Best for complex workflows if latency improves in the future

- GPT-5 Mini → Lower cost, strong intelligence, great balance right now

- GPT-5 Nano → Cheapest option for cost-sensitive use cases without needing reasoning or latency gains

💡 Our testing shows GPT-5s handle transitions well but can be verbose in replies. Try them out and share feedback!

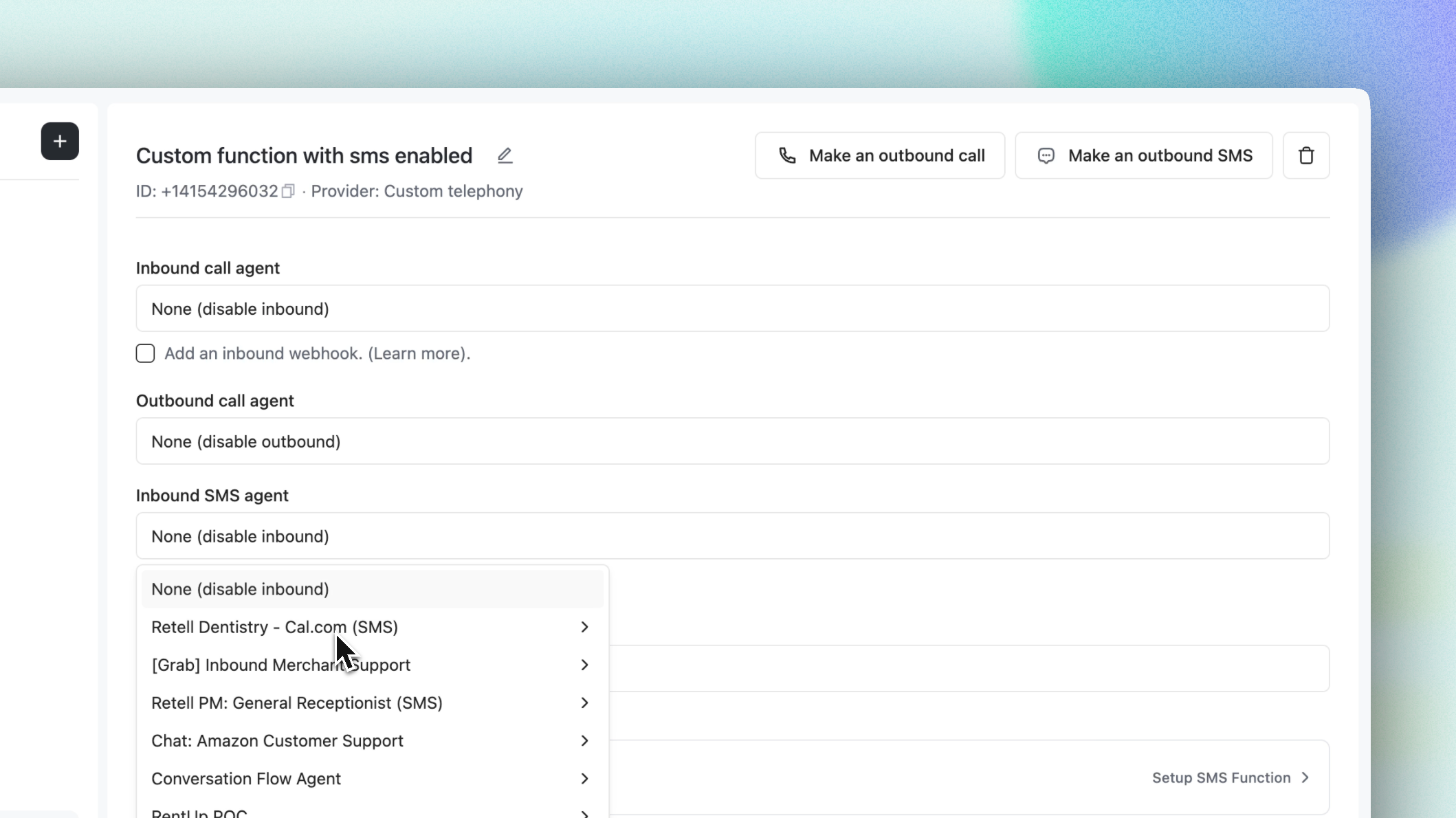

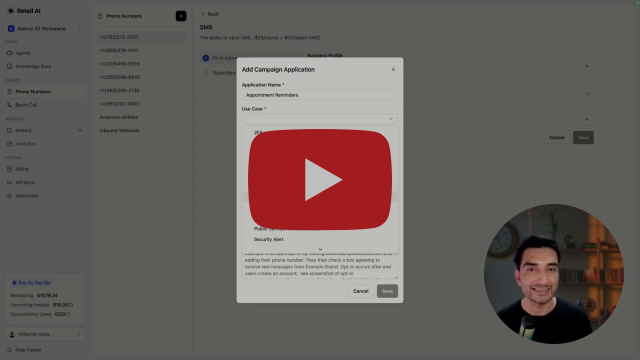

Two-Way SMS Agents (Retell Twilio or BYOT)

You can now connect chat agents to SMS using either a Retell Twilio number or your own Twilio setup.

With 2-Way SMS, you can:

- Send outbound messages to engage proactively

- Handle inbound SMS for real-time support

How to get started:

- Apply for SMS (via Retell or BYOT Twilio number)

- Link your chat agent for 2-way SMS

- Start messaging 🚀

Retell SMS A2P Application Ultra Guide

- How to set up your business profile

- Brand registration process and volume threshold decisions

- Campaign creation with proper use case selection

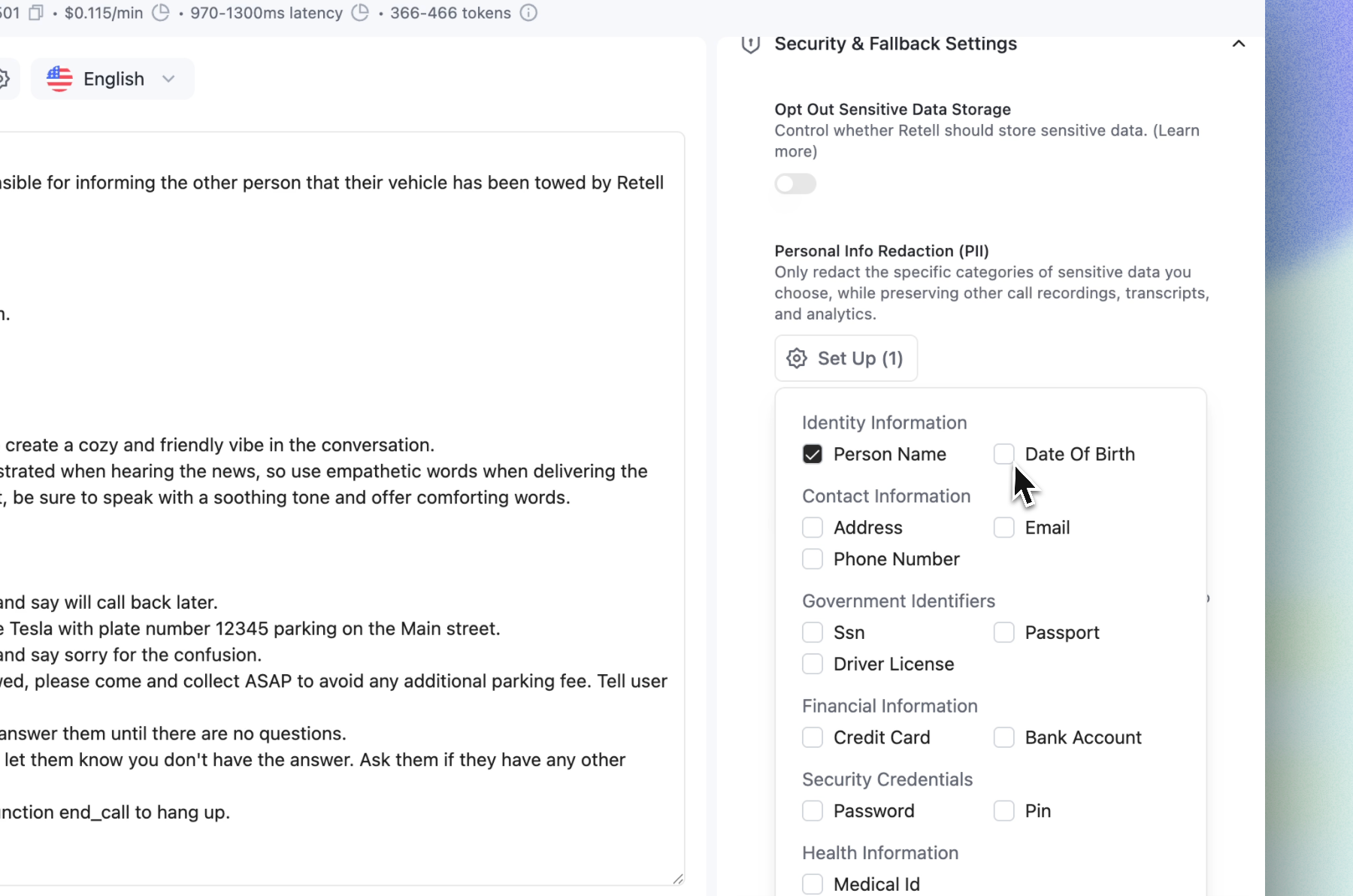

Personal Info (PII) Redaction

Security and compliance are critical in every call.

Retell can now detect and remove sensitive data (names, addresses, DOBs, passwords, PINs) from both transcripts and recordings.

Pricing: $0.01/min add-on

✨ How it works

- Go to Security & Fallback Settings → Personal Info Redaction

- Choose which PII types to redact

- Admins/devs can still toggle visibility for audits.

Toll-Free Numbers + Native International Calling

Previously, international calling was only possible through custom telephony setups. Now, native Retell numbers support international calls to 14 countries, so you are no longer limited to the U.S.

CPS Limits Per Provider

Protect deliverability and avoid carrier throttling by setting Calls-Per-Second caps per provider.

Per-provider control means you can balance load intelligently across Telnyx, Twilio, and your custom stack. The result is steadier throughput and fewer failed attempts.

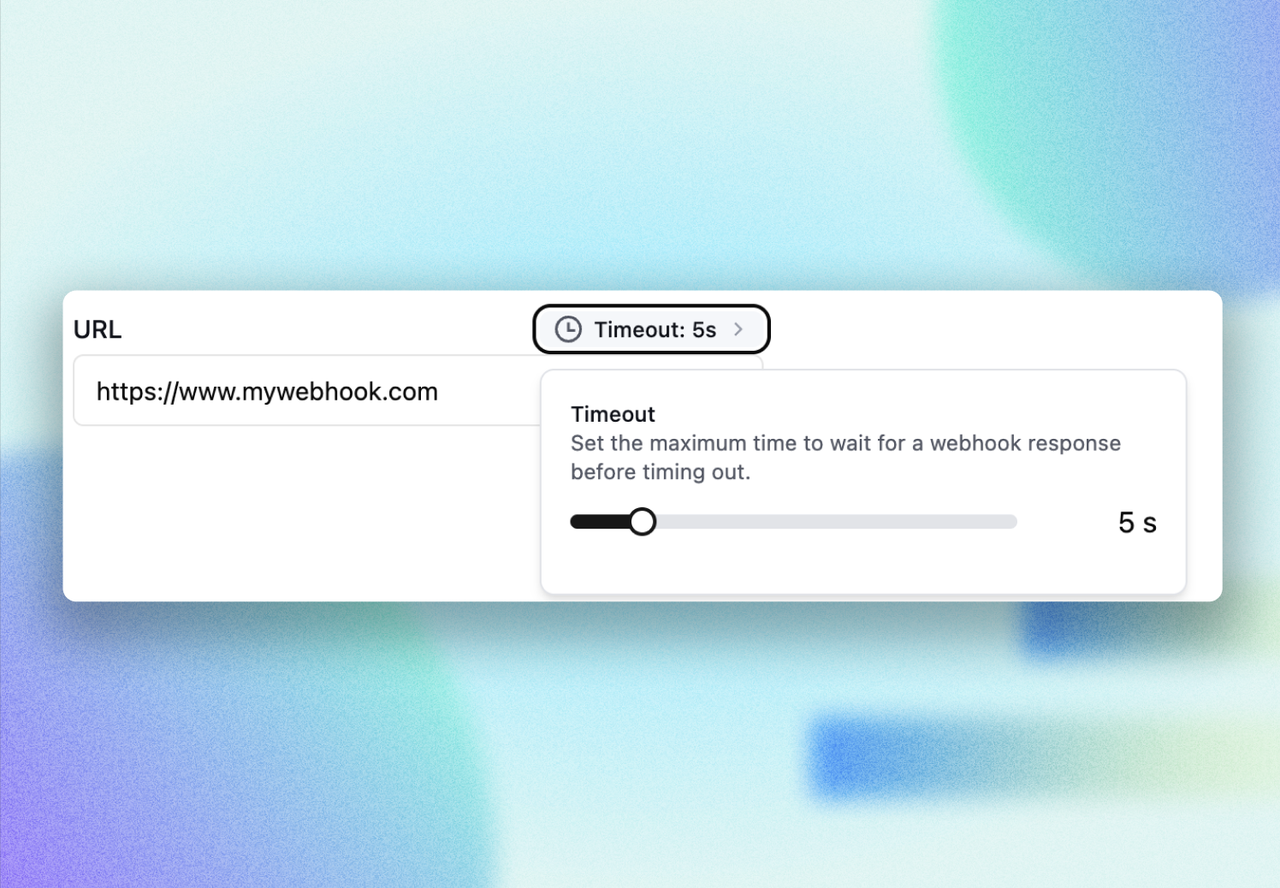

Webhook Timeout Controls

You now control webhook timeouts for both workspace-level and agent-level webhooks (default 5s).

Give longer-running tasks room to complete, or force fast failures when you prefer to fall back and retry.

Now you will experience:

- Reduced flakiness

- Healthy queues

- Predictable behavior under load

Other Improvements

- Retell Voices in OpenAI: Our signature Retell preset voices now register in the OpenAI voice set for a more consistent brand sound!

- Updating a call with external context is now live: You can now update a call and override its dynamic variables.

- https://docs.retellai.com/api-references/update-call#body-override-dynamic-variables
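A minimal sketch of what such an update request could look like. The `override_dynamic_variables` field name is taken from the documentation link above; the endpoint path, the PATCH method, the auth header, and the variable names in the usage line are assumptions for illustration. The request is built but not sent.

```python
import json
import urllib.request


def build_update_call_request(api_key: str, call_id: str, overrides: dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) an update-call request that overrides dynamic variables."""
    # Assumed endpoint path and HTTP method; check the linked API reference.
    url = f"https://api.retellai.com/v2/update-call/{call_id}"
    body = {"override_dynamic_variables": overrides}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


# Hypothetical call ID and variable names, for illustration only.
update_req = build_update_call_request(
    "YOUR_API_KEY", "call_123", {"customer_tier": "gold", "order_status": "shipped"}
)
update_body = json.loads(update_req.data)
```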

Agent Transfer

You can now seamlessly switch between Retell agents during a call, no more clunky transfer workarounds using phone numbers.

This unlocks a powerful way to design modular, reusable agents for specific tasks, like:

🔹 Language routing agent

🔹 CSAT survey agent

🔹 Payment agent

These agents can be inserted anywhere in your call flow, and when transferred, they inherit the full context of the original conversation.

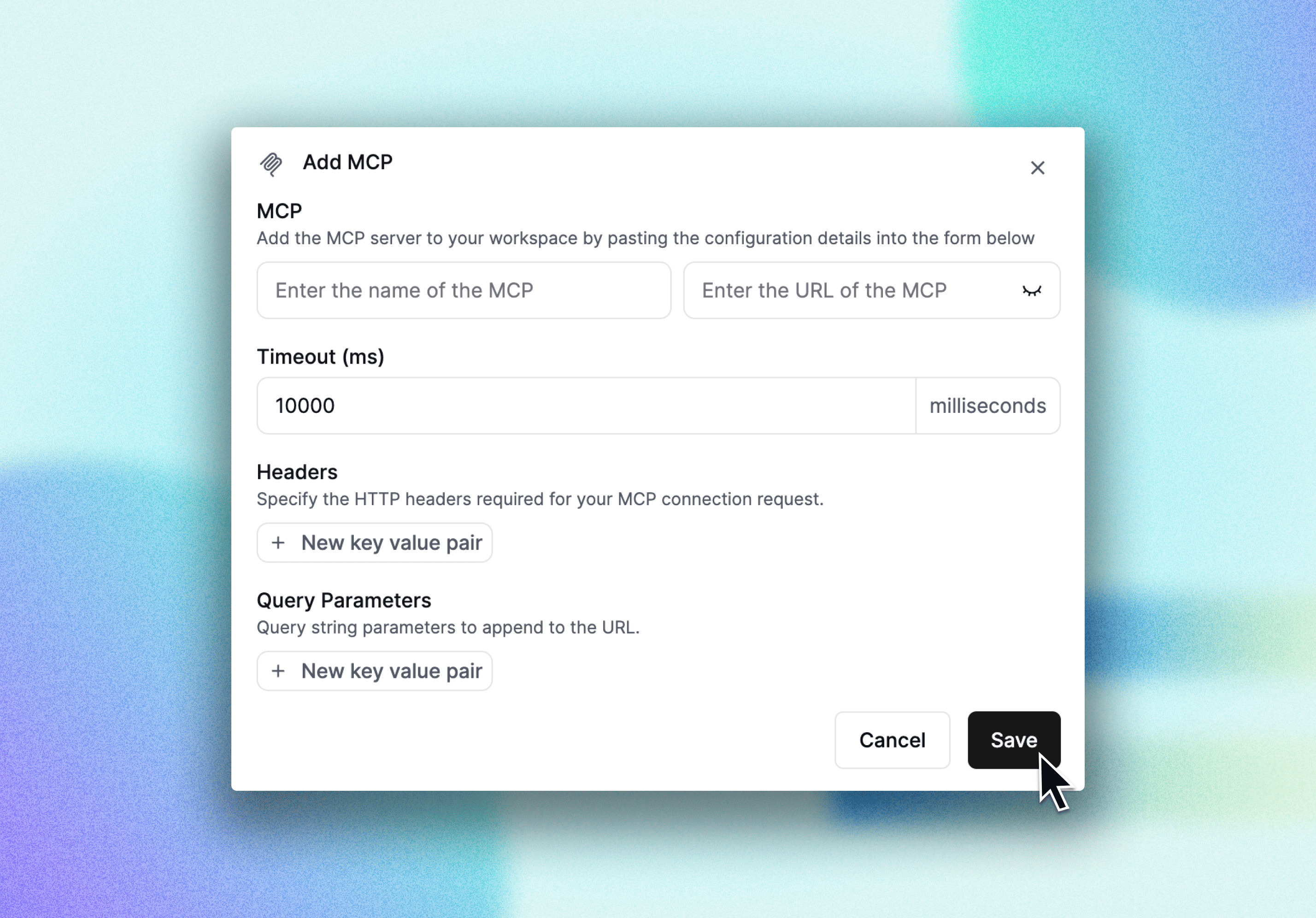

MCP

You can now connect your own MCPs and invoke tools seamlessly.

With Retell AI’s MCP Node, your voice agents can:

- Call any HTTP-based service (Zapier, custom APIs, CRMs)

- Trigger workflows and fetch live data in real time

- Dynamically adapt the conversation based on structured responses

- Stay secure with scoped access, headers, and timeouts

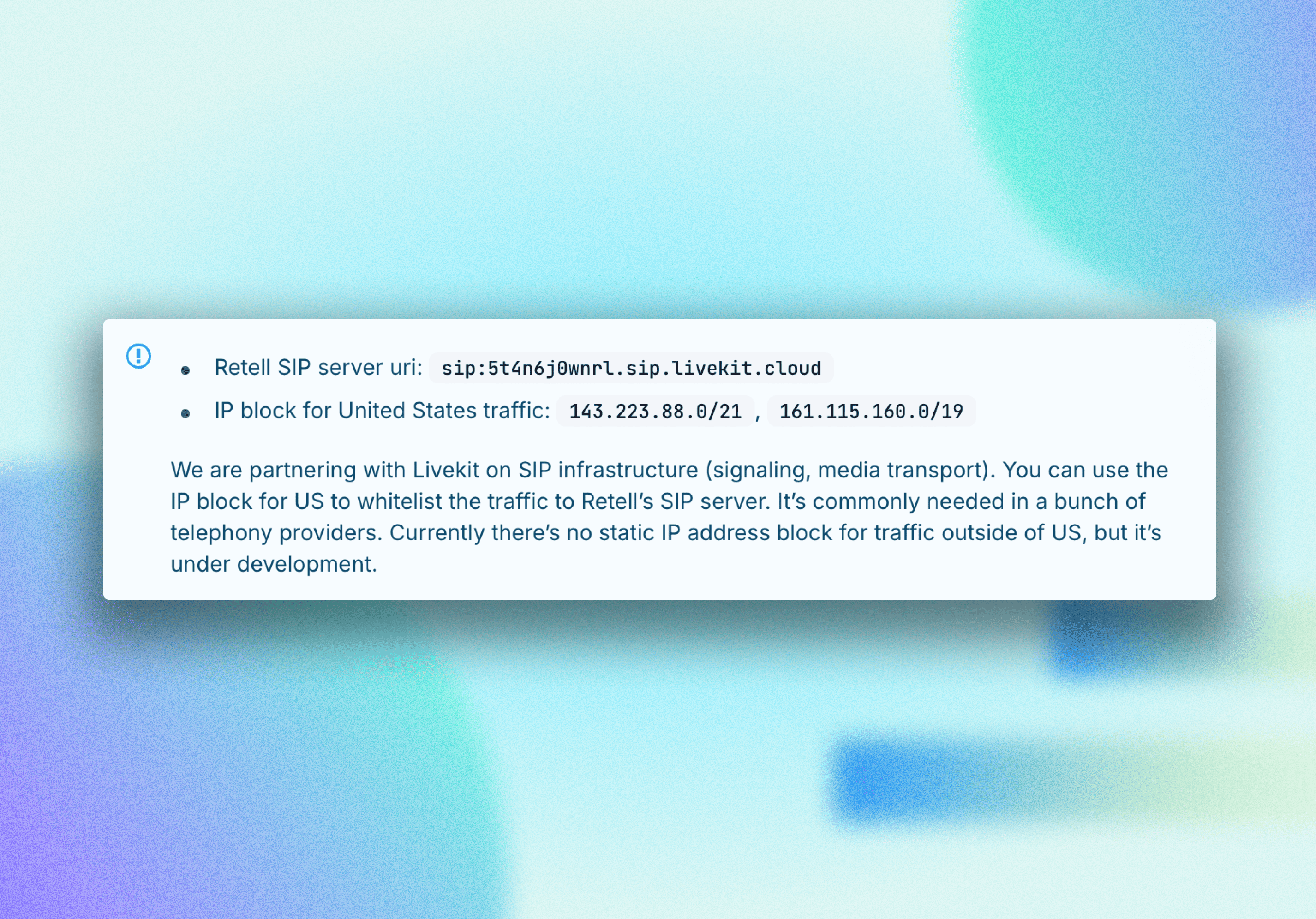

Static IP for Custom Telephony

The feature you’ve been waiting for is here! Static IP addresses are now available for U.S. calls, giving you complete control over your telephony setup. This is perfect for enterprise integrations and custom call routing.

Conversation Flow API

The Conversation Flow API is finally here in our SDK!

Create and manage conversation flows programmatically, bringing unprecedented automation and flexibility to your workflows. Plus, get API access to MCP, Warm Transfer, and Agent Swap all in one update!

Transfer to SIP URI

You can now transfer calls directly to a SIP URI for both cold and warm transfers.

Integrate with any SIP-compatible system effortlessly and expand your telephony possibilities!

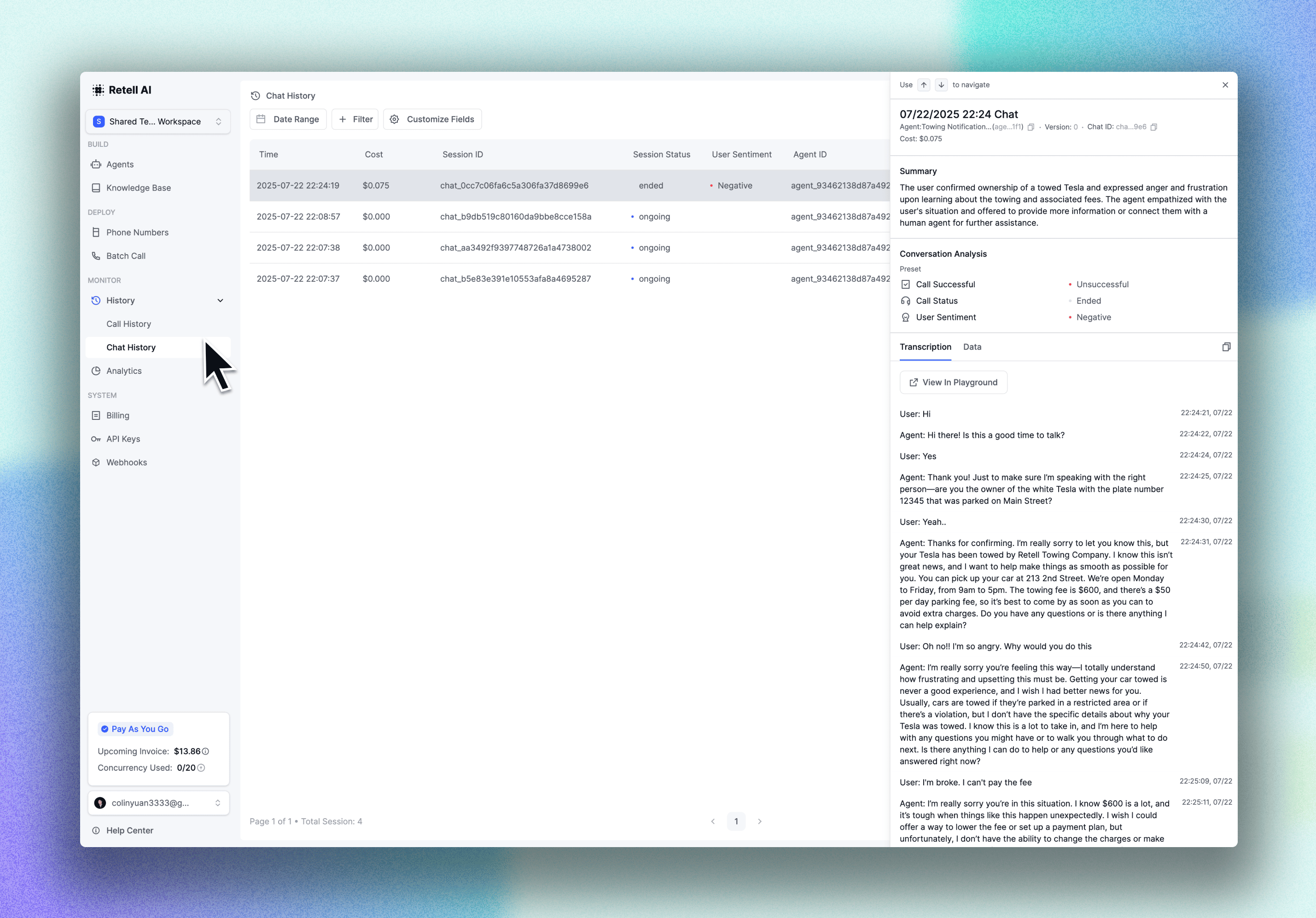

Chat History

Chat History is now live!

Full chat history tracking is now available, giving you complete visibility into all your AI interactions for better insights and follow-ups.

Other Improvements

- Stop batch call controls

- Smart voice fallback

- More AI models included for testing

- Cartesia TTS is now just $0.07/min, down from $0.08/min.

- Reserved Concurrency: Set the amount of concurrency reserved for other calls.

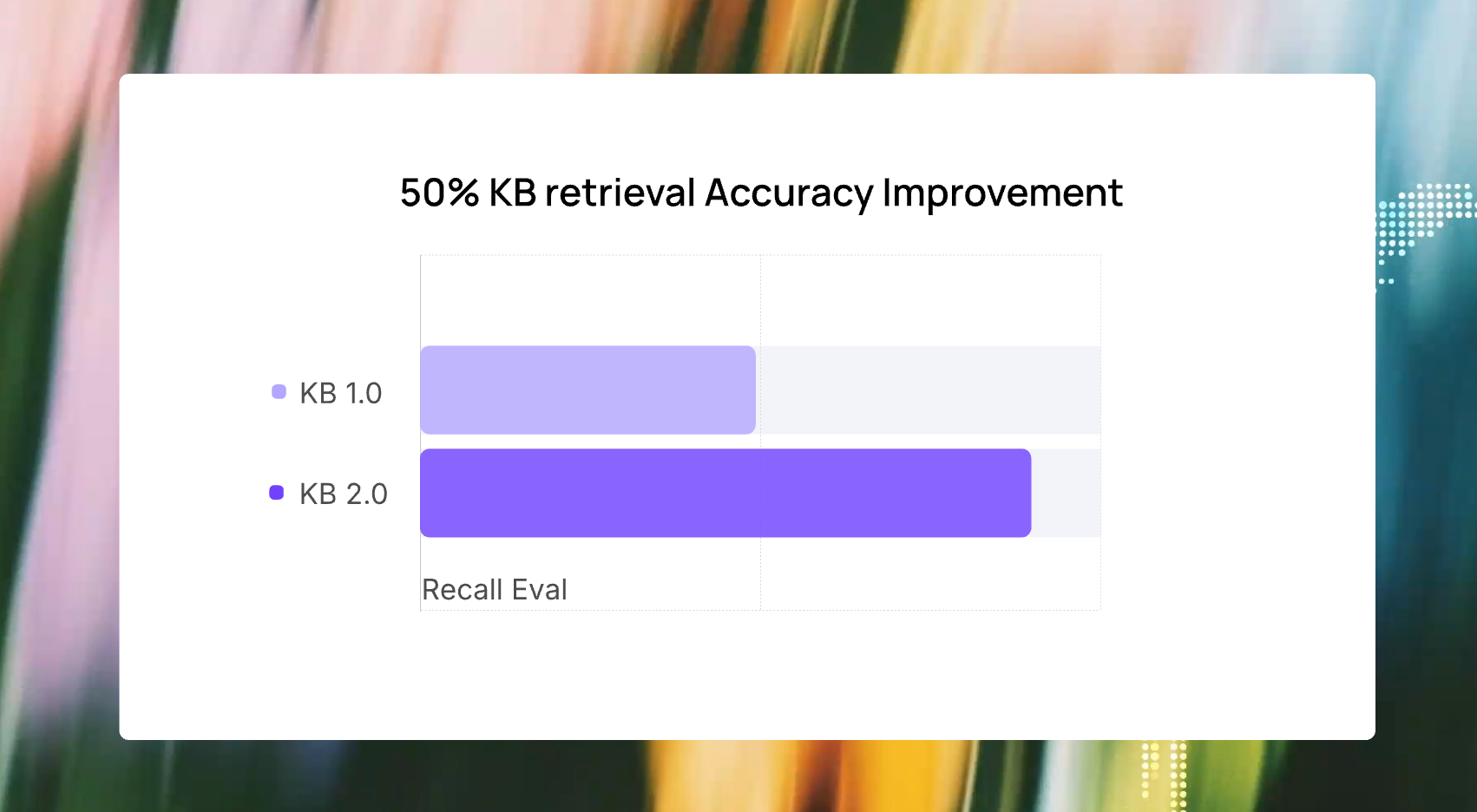

Knowledge Base 2.0

Our Knowledge Base just got a major upgrade, and your AI agents are now a whole lot smarter:

- Smarter recall: Agents now focus on the most relevant parts of each conversation to retrieve accurate answers.

- Improved ranking: Irrelevant chunks no longer show up ahead of the correct information.

- Better precision: Key details like phone numbers and addresses are much more likely to surface, even when earlier conversation turns introduce unrelated context.

In large-scale tests across multiple use cases, KB 2.0 achieved:

📈 Up to 50% improvement in answer accuracy

🔍 More relevant top-ranked results

🧠 Clearer answers to complex, multi-turn questions

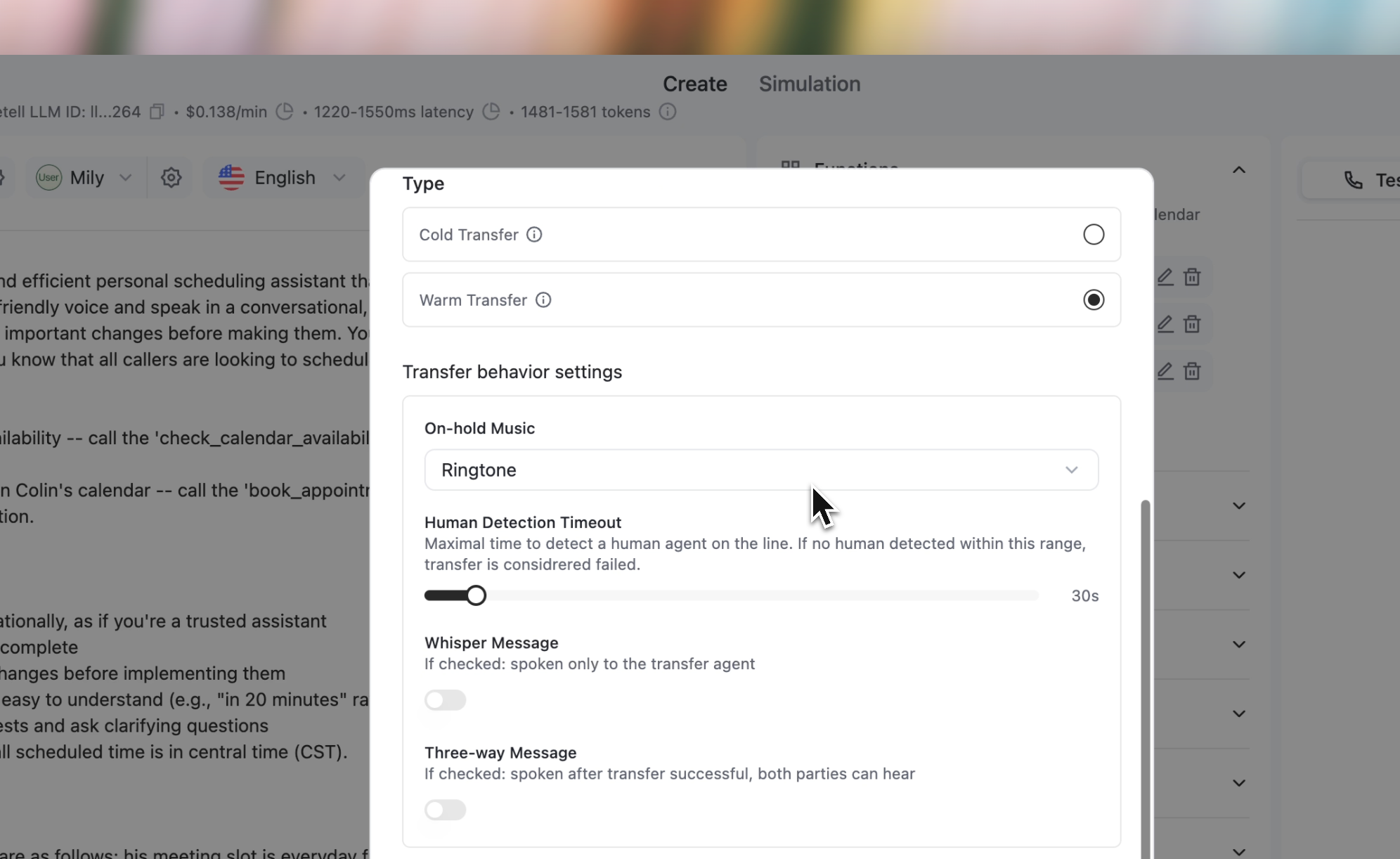

Warm Transfer 2.0

We’ve rolled out major improvements to warm transfers to give you more control and clarity during call handoffs:

- Human Detection: Transfers now include automatic human detection. The AI agent will only complete the connection if it confirms a human is on the receiving end.

- Whisper & Three-Way Messages: Customize the experience with optional whisper messages (spoken only to the transfer target) and three-way messages (heard by both the caller and the target) during the handoff process.

- On-Hold Music: Choose the music your caller hears while they’re on hold during the transfer.

Links to documentation: transfer call function, transfer call node

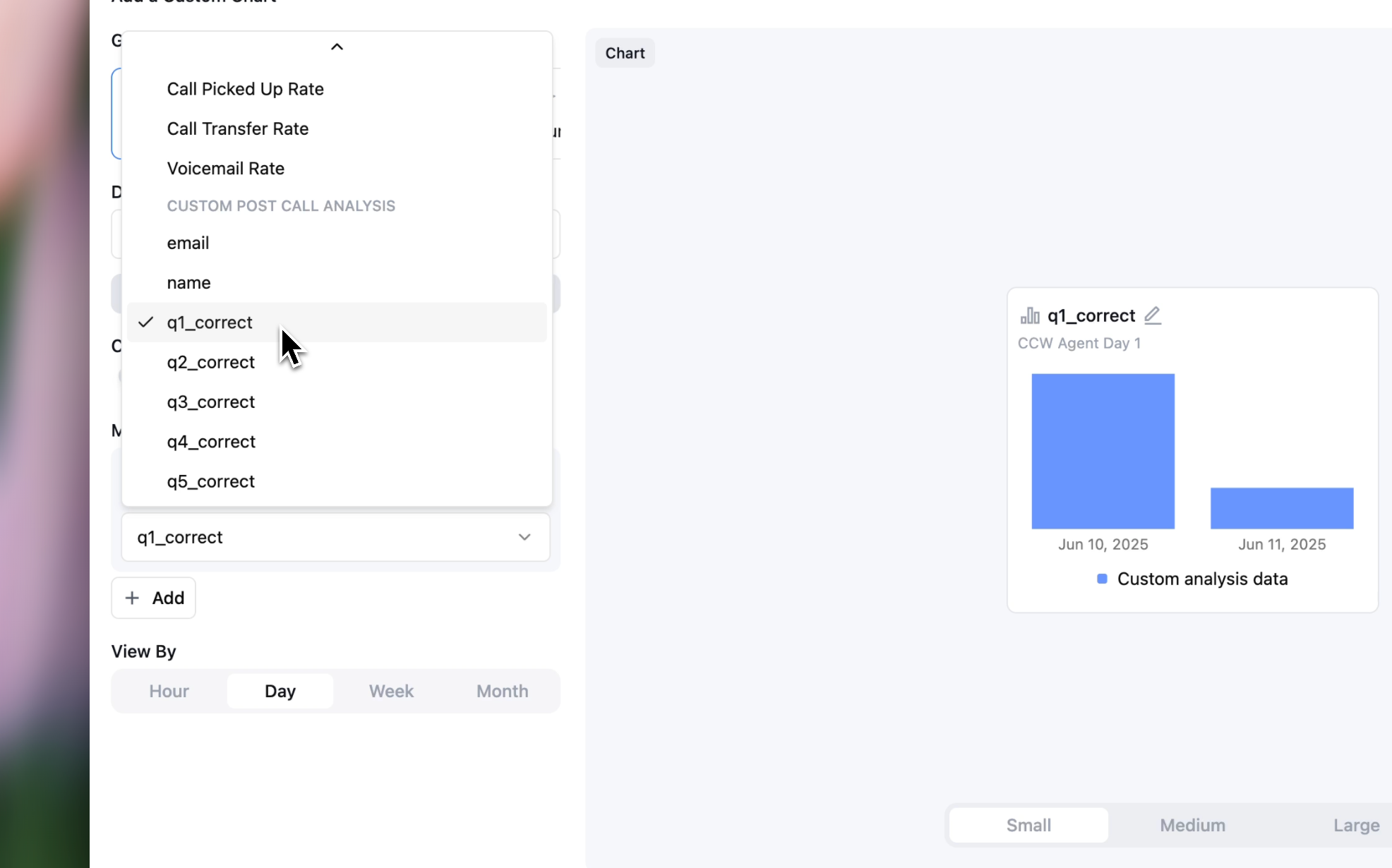

Analytics Dashboard 2.0

You can now turn post-call analytics, such as appointment booking rate, interest level, or lead score, into visual charts.

📊 Add a new chart

🎯 Select an agent, choose post call analytics metrics

🎨 Choose a chart: column, bar, donut, or line

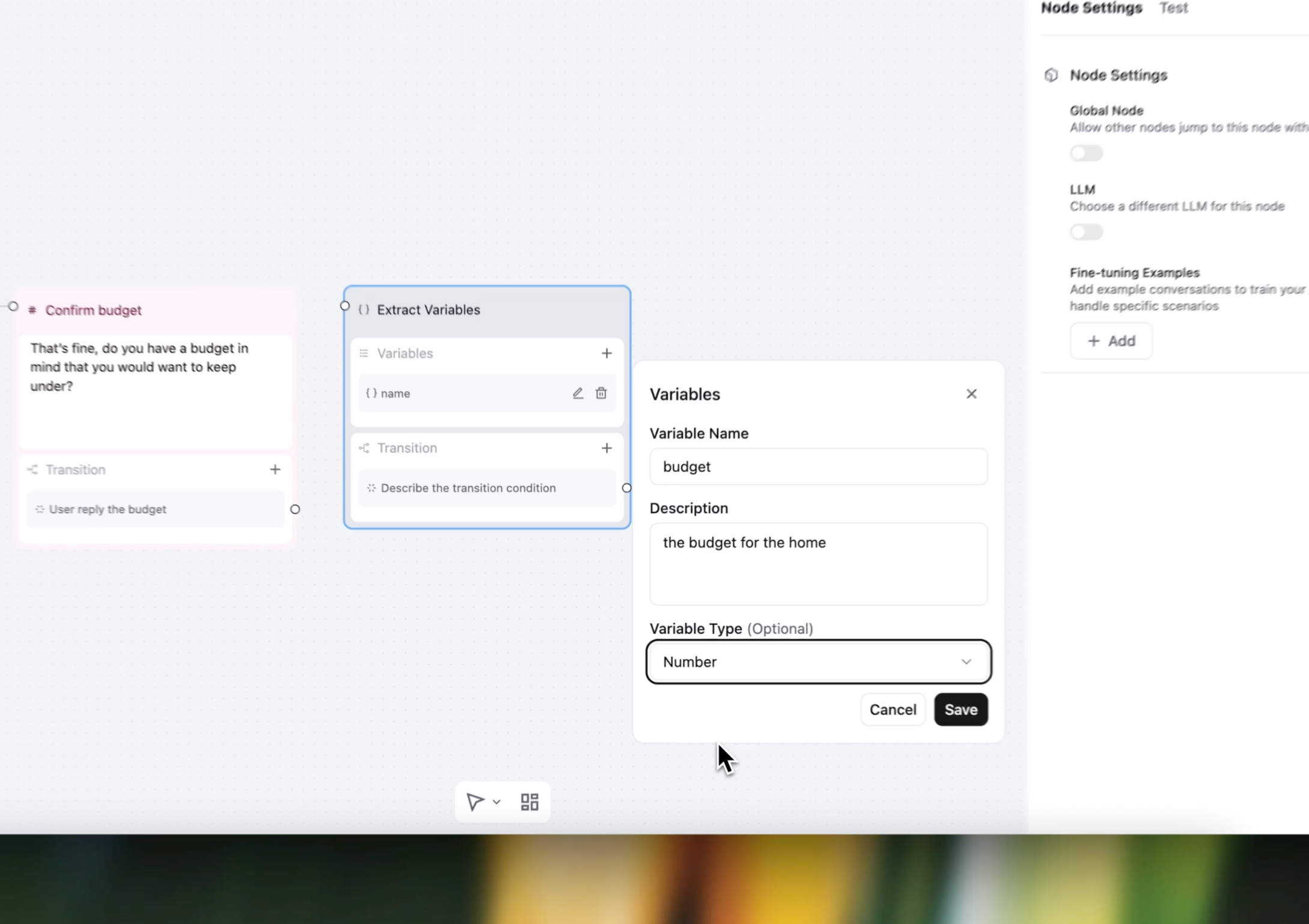

Extract Variables in Real Time

Your agents can now extract and store dynamic variables live, mid-conversation, so collected information is retrieved reliably and accurately.

Your agents can now:

- ✅ Automatically save names, emails, budgets, and preferences

- ✅ Reference collected data anytime during the call

- ✅ Pass dynamic variables to webhooks, CRMs, or integrations

- ✅ Personalize workflows in real time—no custom logic required

Variable Types You Can Collect:

- Text: Names, addresses, feedback

- Numbers: Ages, quantities, prices

- Boolean: Yes/no decisions, preferences

- Enum: Predefined lists (services, products, etc.)

Works in single-prompt, multi-prompt, and conversation flow agents.

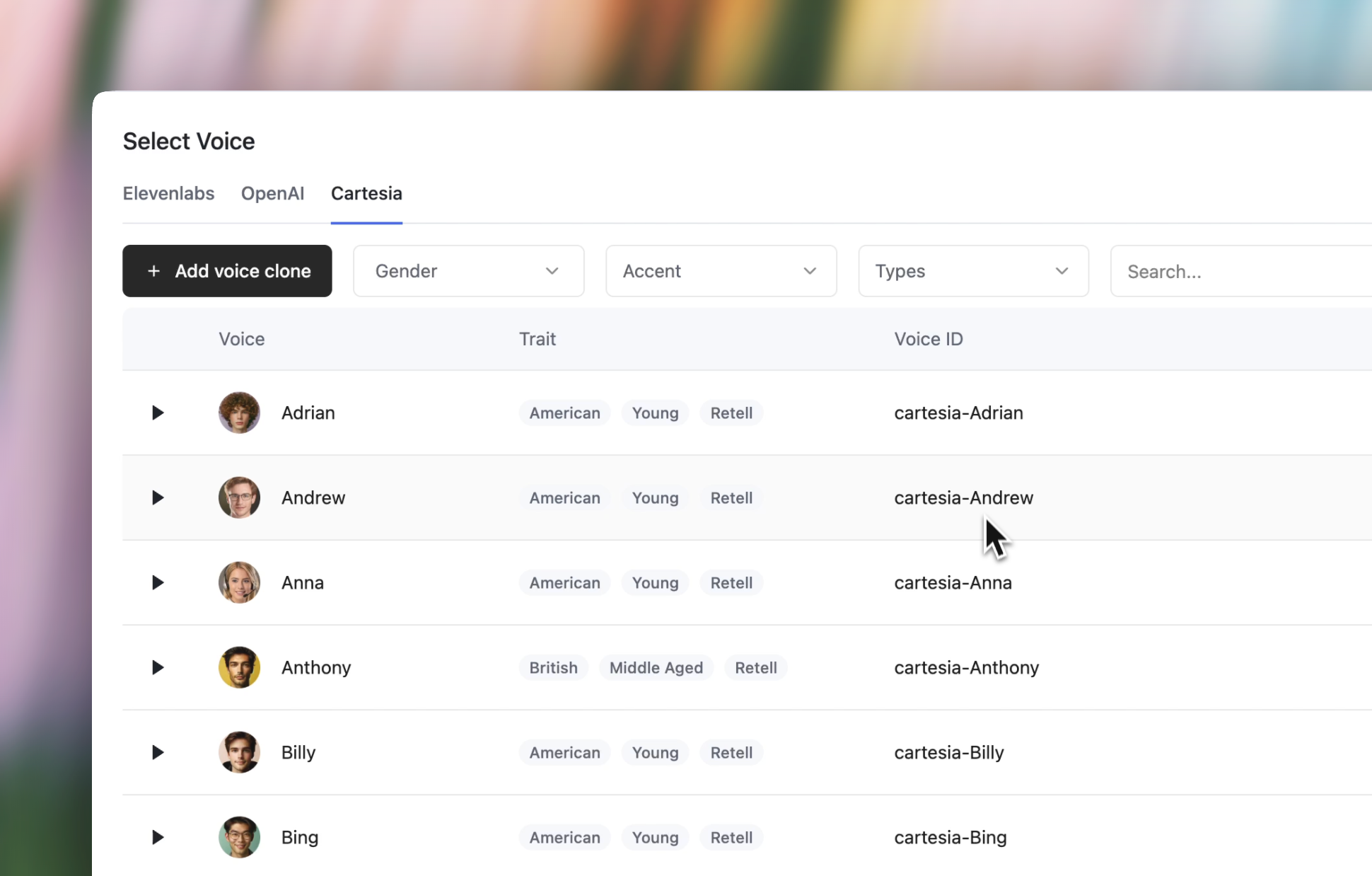

Cartesia Voice

We’ve partnered with Cartesia to bring your agents a lifelike, ultra-smooth voice experience.

You can now set Cartesia as your default voice, or use it as an automatic fallback if your primary provider goes down.

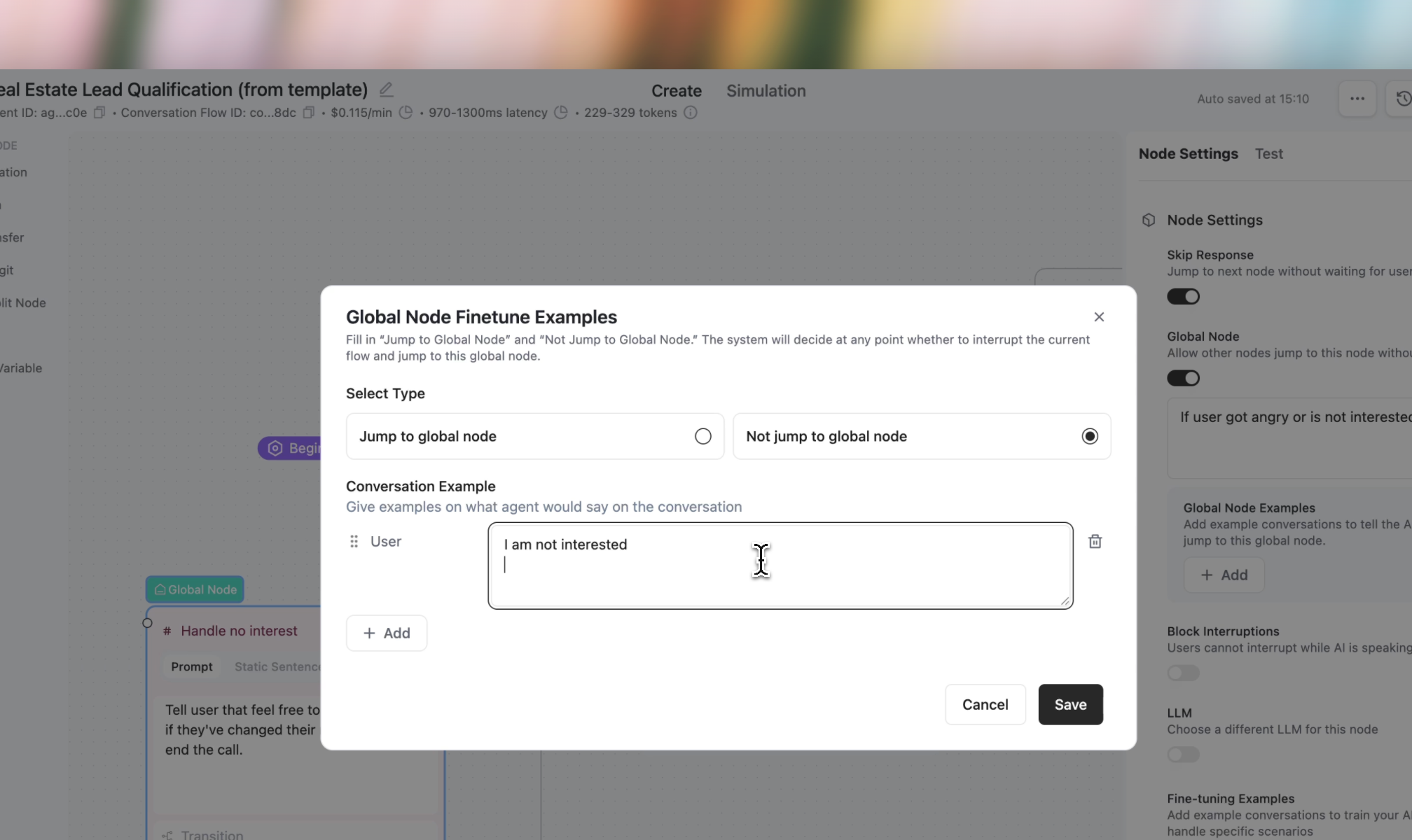

Global Node Fine Tuning

You can now add example conversations so the AI knows when, and when not, to jump to Global Nodes in your agent’s conversation flow.

Start using by enabling the Global Node option on any node in the conversation flow builder.

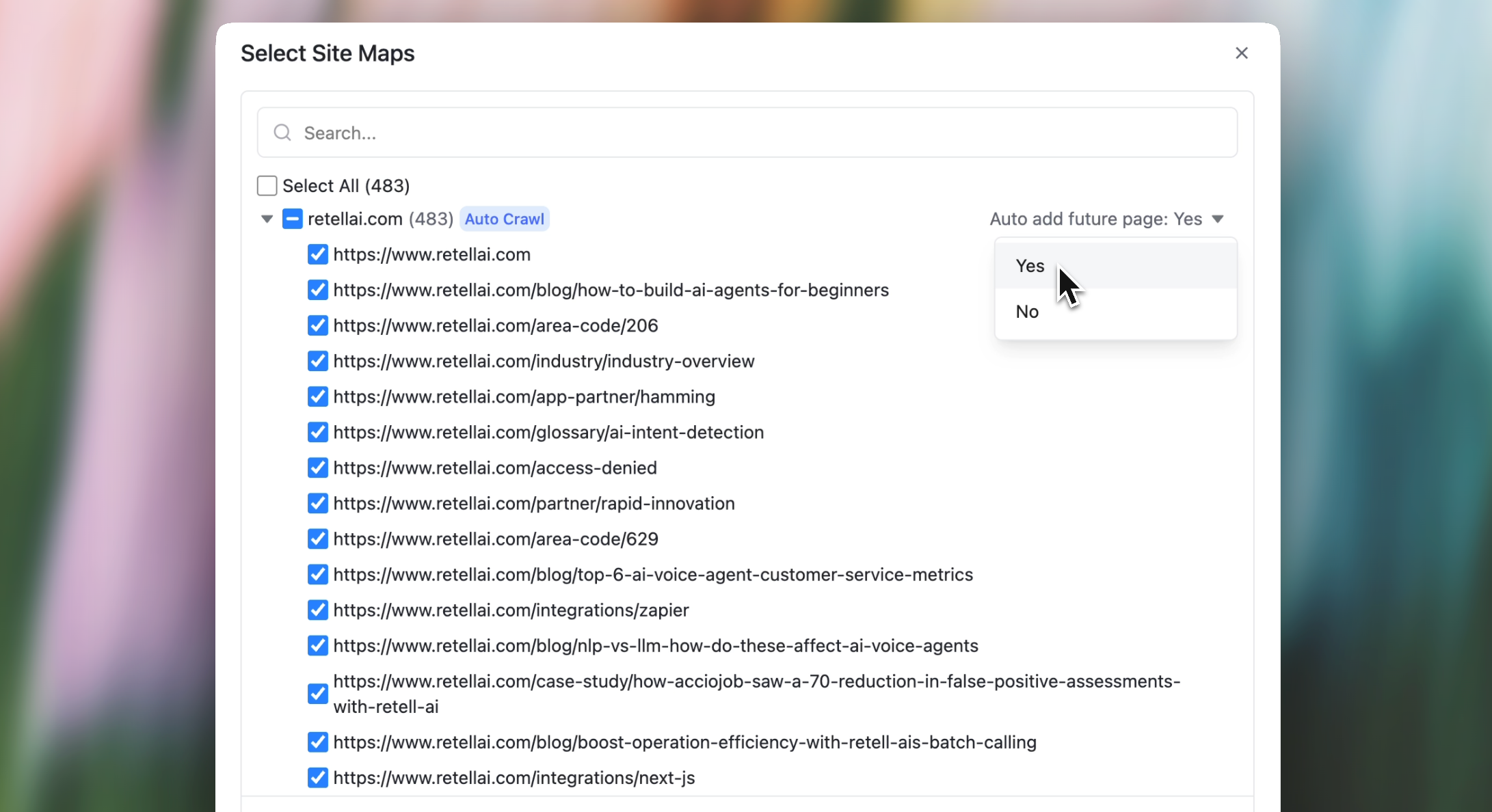

Knowledge Base Auto Add Future Pages

You can now enable auto-add future pages for specific URL paths.

Once enabled, any new pages added under those paths will be automatically detected, crawled, and kept up to date.

For example, if you specify a domain like docs.retellai.com, any new pages added under that domain will be auto-crawled going forward.

Other Improvements

- View Call History in LLM Playground: You can now track how a past call transitioned through each node in the conversation flow.

- Regenerate Response For Single/Multi Prompt

- Tool mock for Single/Multi Prompt

Chat Widget

You can now embed a Retell Chat agent directly into your website or app — enabling users to interact with your AI agents via a modern, lightweight text UI, without any setup overhead.

How to set it up:

1. Copy and paste the embed script into your website

2. Replace the publicKey, agentId, and version

3. Customize widget name

Copy and paste the embed code to add your chat agent. No backend work is required.

Public Keys for Chat Widget

Unlike API keys, which must remain private, Public Keys are designed for frontend use and are required for authorizing access to the new chat widget.

Manage your keys here:

Dashboard → Keys → Public Keys

Custom Function Enhancement

You can now use Custom Functions to:

- Make GET, POST, PUT, PATCH, and DELETE requests

- Add custom headers and query parameters

- Enter parameters through an improved UI

Fast Tier For OpenAI models

The former “High Priority” model option is now Fast Tier, with upgraded performance and a reduced price:

• Predictable latency

• Uncapped scale

• Higher reliability

• New pricing: 1.5x model cost (down from 2x)

Improved Outbound Diagnostics: New Telephony Error Codes

To give you better visibility into failed calls, we’ve expanded the list of disconnection reasons and restructured the call status reporting for outbound dials.

New disconnection_reason values:

- invalid_destination: Number is malformed or unreachable

- telephony_provider_permission_denied: SIP trunk auth issue

- telephony_provider_unavailable: Provider is offline or failing

- sip_routing_error: SIP loop or routing misconfig

- marked_as_spam: Blocked by spam filter

- user_declined: Callee manually declined the call

🗓 Effective Date: June 30, 2025

Ensure your integration handles these new values properly.
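One way to handle the new values is an explicit lookup with a safe default for anything unrecognized. The sketch below is only an illustration: the `Action` categories and the choice of action per reason are hypothetical policy decisions, not Retell recommendations. Only the six reason strings come from the list above.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical follow-up actions an integration might take."""
    FIX_NUMBER = "fix_number"
    CHECK_TELEPHONY_CONFIG = "check_telephony_config"
    RETRY_LATER = "retry_later"
    DO_NOT_RETRY = "do_not_retry"
    UNKNOWN = "unknown"


# Reason strings are from the changelog; the mapped actions are assumptions.
NEW_REASON_ACTIONS = {
    "invalid_destination": Action.FIX_NUMBER,
    "telephony_provider_permission_denied": Action.CHECK_TELEPHONY_CONFIG,
    "telephony_provider_unavailable": Action.RETRY_LATER,
    "sip_routing_error": Action.CHECK_TELEPHONY_CONFIG,
    "marked_as_spam": Action.DO_NOT_RETRY,
    "user_declined": Action.DO_NOT_RETRY,
}


def action_for(disconnection_reason: str) -> Action:
    """Map a disconnection_reason to an action, defaulting to UNKNOWN."""
    return NEW_REASON_ACTIONS.get(disconnection_reason, Action.UNKNOWN)
```

Falling back to `Action.UNKNOWN` instead of raising keeps the integration working if further values are added later.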

Medical Transcription Mode

We’ve launched a medical vocabulary setting for English voice agents. When enabled, transcription will favor terminology commonly used in clinical and healthcare scenarios — improving accuracy for patient-facing use cases.

Currently available for English agents only.

Other Improvements

- Custom function reply response limit has been increased to 15,000 characters, giving you more flexibility when returning detailed responses.

- LLM prompt token limit is now set to 32,768 tokens, enabling more complex and context-rich conversations.

- When importing custom telephony numbers, the E.164 format requirement has been removed, so you can import PBX numbers and pseudo-numbers.

- Call History table & CSV exports now include Agent ID and Version. Enable these via Customize Fields.

- You can now write a prompt for voicemail.

- Added a few new languages: Dutch (Belgium), Catalan and Thai.

Upcoming Pricing Adjustments (Effective June 1st)

We’re making a few changes to our pricing model to better align with usage patterns and infrastructure costs. These updates will go into effect on June 1st:

- Token-Based LLM Pricing

  LLM usage will now be billed based on token count. Prompts up to 3,500 tokens will follow the existing flat rate. Prompts exceeding 3,500 tokens will scale proportionally in cost. For example:

  - A prompt with 3,200 tokens will still be billed at the base rate.

  - A prompt with 4,500 tokens will be billed at 4500 / 3500 × base rate.

  - Example with OpenAI GPT-4.1: 0.07 + (4500 / 3500 × 0.045) = 0.1279 per minute.
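The scaling rule can be sketched as a small function. This is a minimal illustration of the arithmetic in the example, assuming the 0.045 figure is the per-minute LLM base rate and the 0.07 figure is the remaining per-minute amount that does not scale with tokens; the function and constant names are made up for this sketch.

```python
BASE_TOKEN_ALLOWANCE = 3500  # prompts up to this size are billed at the base rate


def llm_rate_per_minute(prompt_tokens: int, base_llm_rate: float) -> float:
    """Per-minute LLM rate after token-based scaling."""
    if prompt_tokens <= BASE_TOKEN_ALLOWANCE:
        return base_llm_rate
    return prompt_tokens / BASE_TOKEN_ALLOWANCE * base_llm_rate


# Figures from the changelog example.
non_llm_rate = 0.07
gpt_41_base_rate = 0.045

total = non_llm_rate + llm_rate_per_minute(4500, gpt_41_base_rate)
print(round(total, 4))  # 0.1279
```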

- Short Calls with Minimum Charge When Using Dynamic AI First Sentence

  To account for LLM usage, calls that start with a Dynamic AI First Sentence now have a minimum billable duration of 10 seconds.

  - Calls that use “User speaks first” or “Static AI first sentence” (supports dynamic variables) are not affected.

  - Calls that last longer than 10 seconds are not affected by the minimum charge, even if you use a Dynamic AI First Sentence.

  - Unconnected calls (e.g., no answer, busy, or failed dial) will not be charged.
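The minimum-charge rules can be expressed as a short function. This is a sketch of the rules as stated above, not the billing implementation; the function and parameter names are hypothetical.

```python
MIN_BILLABLE_SECONDS = 10  # applies only to calls using a Dynamic AI First Sentence


def billable_seconds(duration_s: float, connected: bool, dynamic_first_sentence: bool) -> float:
    """Return the billable duration of a call under the minimum-charge rule."""
    if not connected:
        return 0.0  # no answer, busy, or failed dial: not charged
    if dynamic_first_sentence:
        return max(duration_s, MIN_BILLABLE_SECONDS)
    return duration_s  # "User speaks first" or a static first sentence
```

For example, a connected 4-second call with a Dynamic AI First Sentence is billed as 10 seconds, while the same call with “User speaks first” is billed as 4 seconds.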

Further Latency and Stability Optimization

Latency and stability have always been core priorities at Retell. By leveraging the latest LLMs, we’re not only benefiting from improved performance and lower costs, but also applying smarter strategies to keep the platform fast, consistent, and reliable.

We’ve recently made additional optimizations to reduce latency and improve stability:

- Cutting average response times by 100–200ms

- Proactively avoiding latency spikes during OpenAI’s peak traffic

Learn more about the other strategies we use to maintain 99.99% uptime.

One-Time SMS

We’ve released the SMS feature!

You can now register the SMS function using your Retell number and send SMS messages during a call. Simply add a function node in your conversation flow or use it within a single or multi-prompt.

More tutorials coming soon!

User Keypad Input Detection (User DTMF) 2.0

We’ve enhanced our keypad input feature to better support different data collection scenarios. You can now choose from multiple input-ending methods:

- End with a special key: Let users press # or * to finish entering input. Useful for collecting variable-length information like ID numbers or notes.

- Fixed digit length: Automatically end input after a specific number of digits. Perfect for fixed fields like the last 4 digits of an SSN.

- Timeout-based ending: If no input is received after a short period, input collection will end automatically. This is enabled by default.

These options make it easier to customize voice agent behavior to fit your exact workflow.
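The three ending methods can be illustrated with a small simulation. This is a sketch of the logic only, not Retell's implementation: the function name, the event format (key plus seconds since the previous key), and the example timeout value are all assumptions.

```python
from typing import Iterable, Optional


def collect_digits(
    presses: Iterable[tuple[str, float]],
    end_keys: frozenset = frozenset(),
    max_digits: Optional[int] = None,
    timeout_s: Optional[float] = None,
) -> str:
    """Collect keypad input until an end key, a fixed length, or a timeout.

    Each press is (key, seconds_since_previous_key).
    """
    collected: list[str] = []
    for key, gap in presses:
        if timeout_s is not None and gap > timeout_s:
            break  # silence lasted too long: input ended before this key
        if key in end_keys:
            break  # special key (# or *) ends the input and is not stored
        collected.append(key)
        if max_digits is not None and len(collected) == max_digits:
            break  # fixed digit length reached
    return "".join(collected)


# Variable-length ID ended with "#".
id_number = collect_digits(
    [("1", 0.0), ("2", 0.5), ("3", 0.4), ("#", 0.3), ("9", 0.2)],
    end_keys=frozenset({"#"}),
)

# Fixed length: stop after 4 digits.
last_four = collect_digits(
    [("1", 0.0), ("2", 1.0), ("3", 1.0), ("4", 1.0), ("5", 1.0)],
    max_digits=4,
)
```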

Advanced Denoising Mode

Denoising Mode Just Got Updated

You can now choose between two levels of noise cancellation:

- Remove noise: Filters out background sounds.

- Remove noise + background speech: Filters out both noise and unwanted speech, such as voices from TVs or nearby conversations.

Perfect for boosting transcription accuracy in noisy environments.

Equation-Based Transition for Conversation Flow

Equation-Based Transition for Conversation Flow is now live.

- No need to write things like {{dynamic_variable}} === value in your transition prompt anymore.

- Instead, use an equation-based transition for better results.

If you gather information during the conversation, simply select {{dynamic variable}} = value or string = value in your equation to help the LLM understand and route properly.

Retell MCP Server

The Retell MCP Server is now live.

Connect your favorite AI assistant (ChatGPT, Claude, Cursor, Grok, etc.) directly to Retell’s voice agent platform:

- Trigger real phone calls through your AI assistant

- Automate full conversations with human-like voice agents

- Collect insights for analysis and follow-up

- Streamline workflows without any manual steps

Other Improvements

- Make X Retell: We’ve added a native Make integration to the Make app directory. Feel free to try it out here: https://www.make.com/en/integrations/retell-ai

- Zapier X Retell: We’ve built a native Zapier integration. Feel free to try it out here: https://zapier.com/apps/retell-ai/integrations

OpenAI 4.1 family

The GPT-4.1 family is now live on Retell AI! Here’s how the models compare:

Intelligence:

GPT 4.1 > GPT 4o mini > GPT 4o > GPT 4.1 mini > GPT 4.1 nano

Latency (lower is faster):

GPT 4.1 nano < GPT 4.1 mini < GPT 4o mini < GPT 4o < GPT 4.1

Price (per minute):

GPT-4.1 nano ($0.004) < GPT-4o mini ($0.006) < GPT-4.1 mini ($0.016) < GPT-4.1 ($0.045) < GPT-4o ($0.050)

Version Control for Agents

You can now create and manage agent versions directly in the Agent Builder:

• Make changes and test your agent safely—without affecting your live production calls.

• Revert to any previous version with a single click.

Just hit the “Deployment” button to save a version, and use the history button to view and restore previous versions.

Test Outbound Calls with Dynamic Variables

You can now enter dynamic variables when sending a test call directly from the platform—making it easier to simulate real scenarios during testing.

Note: The test call will automatically pull in the dynamic variables you’ve set in the agent configuration.

Expanded Multilingual Support

Multilingual support has been updated!

Agents can now switch seamlessly between English, Spanish, French, German, Hindi, Russian, Portuguese, Japanese, Italian, and Dutch (using Nova 3).

More Languages

We’ve also added support for 10 more languages:

Romanian, Danish, Finnish, Greek, Indonesian, Norwegian, Slovak, Swedish, Bulgarian, Hungarian.

Opt-in Secure URLs

If you want to prevent unauthorized access in case the URL is leaked, you can opt in for secure URLs. Secure URLs automatically expire 24 hours after they are generated, providing an additional layer of security.

Simulation Testing Now Live for All Agents

We’ve launched Simulation Testing for both single and multi-prompt agents!

Now, instead of manually typing inputs, you can write a user prompt to quickly simulate a full conversation.

We’ve also added Batch Simulation Testing, so you can run multiple test cases at once with ease.

More System Default Dynamic Variables

We’ve added more system default dynamic variables. Currently supported:

- {{current_time}}: current time in America/Los_Angeles (Monday, March 24, 2025 at 02:53:01 PM PDT)

- {{current_time_[timezone]}}: current time in given timezone

- for example: current_time_Australia/Sydney, current_time_America/New_York

- {{call_type}}: web_call or phone_call

For Phone calls only:

- {{direction}}: inbound or outbound

- {{user_number}}: user phone number (inbound: from_number, outbound: to_number)

- {{agent_number}}: agent phone number (inbound: to_number, outbound: from_number)
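
As an illustration of what these variables contain, the Python sketch below reproduces the time format shown above and the inbound/outbound number mapping. The function names and the phone numbers are placeholders invented for this example; only the format and the mapping come from the list above.

```python
from datetime import datetime
from zoneinfo import ZoneInfo


def format_current_time(now: datetime, timezone: str = "America/Los_Angeles") -> str:
    """Render a timezone-aware datetime in the style shown for {{current_time}}."""
    local = now.astimezone(ZoneInfo(timezone))
    return local.strftime("%A, %B %d, %Y at %I:%M:%S %p %Z")


def phone_variables(direction: str, from_number: str, to_number: str) -> dict[str, str]:
    """Derive {{user_number}} and {{agent_number}} from the call direction."""
    if direction == "inbound":
        # The user dialed in: the user is the caller, the agent is the callee.
        return {"direction": direction, "user_number": from_number, "agent_number": to_number}
    if direction == "outbound":
        # The agent dialed out: the agent is the caller, the user is the callee.
        return {"direction": direction, "user_number": to_number, "agent_number": from_number}
    raise ValueError(f"direction must be 'inbound' or 'outbound', got {direction!r}")


moment = datetime(2025, 3, 24, 14, 53, 1, tzinfo=ZoneInfo("America/Los_Angeles"))
print(format_current_time(moment))                      # Monday, March 24, 2025 at 02:53:01 PM PDT
print(format_current_time(moment, "America/New_York"))  # Monday, March 24, 2025 at 05:53:01 PM EDT
print(phone_variables("inbound", "+14155550100", "+14155550199"))
```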

Default Value for Dynamic Variables

Add a default value for dynamic variables across all endpoints if they are not provided.
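
A rough Python sketch of the behavior: placeholders are filled from the values supplied with the call first and from the defaults second. The `render` helper is hypothetical, and leaving unknown placeholders untouched is an assumption made for this example, not documented platform behavior.

```python
import re

# Matches {{name}} placeholders made of letters, digits, and underscores.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render(template: str, provided: dict[str, str], defaults: dict[str, str]) -> str:
    """Fill {{name}} placeholders, preferring provided values over defaults."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name in provided:
            return provided[name]
        if name in defaults:
            return defaults[name]
        return match.group(0)  # assumption: unknown placeholders stay as written

    return PLACEHOLDER.sub(substitute, template)


prompt = "Hi {{customer_name}}, your order {{order_id}} is on its way."
defaults = {"customer_name": "there"}

print(render(prompt, {"order_id": "A-1001"}, defaults))
# Hi there, your order A-1001 is on its way.
```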

Inbound Call Webhook

If you want to use different agents under the same inbound phone number, such as setting up a daytime agent and an after-hours agent, or routing to a different agent based on the incoming phone number, this is now possible with the Phone Inbound Webhook.
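
The decision your webhook handler makes could look like the Python sketch below. Only the routing logic is shown; the webhook's request and response format is not covered here, and the agent IDs, the caller list, and the 9:00 to 17:00 business hours are all made up for the example.

```python
from datetime import time

# Hypothetical agent IDs and caller list, for illustration only.
DAYTIME_AGENT = "agent_daytime"
AFTER_HOURS_AGENT = "agent_after_hours"
VIP_AGENT = "agent_vip"
VIP_CALLERS = {"+14155550100"}


def choose_agent(from_number: str, local_time: time) -> str:
    """Pick an agent from the caller's number first, then from the time of day."""
    if from_number in VIP_CALLERS:
        return VIP_AGENT
    if time(9, 0) <= local_time < time(17, 0):
        return DAYTIME_AGENT
    return AFTER_HOURS_AGENT


print(choose_agent("+14155550123", time(10, 30)))  # agent_daytime
print(choose_agent("+14155550123", time(22, 15)))  # agent_after_hours
print(choose_agent("+14155550100", time(22, 15)))  # agent_vip
```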

Better Accuracy? Try GPT-4o for Post-Call Analysis

We’ve added more LLM options for post-call analysis.

If you find the default analysis isn’t accurate, feel free to switch to GPT-4o.

Once selected, GPT-4o will be charged at $0.017 per session.

If you choose GPT-4o Mini, post-call analysis remains free.

Organize Your Agents with Folders

To better manage demo and production agents, you can now organize them using folders.

If you prefer not to use this feature, simply collapse the folder view.

Performance Analytics (Mixpanel for AI Voice Agents)

Now you can use our analytics dashboard to monitor the performance of Voice AI Agents:

• Monitor key metrics like call success rate, transfer rate, call counts, and latency variations.

• Compare A/B test results across different agents.

• Create custom charts tailored to your needs.

• Easily dive deep into programmatic calls for better troubleshooting.

This feature will be available for free starting early next week!

Conversational Flow Testing Suite

We’ve released an advanced testing suite with a bunch of exciting features:

1️⃣ Debug AI responses and regenerate them as needed.

2️⃣ Simulation testing without manually typing input.

3️⃣ Batch simulation testing, allowing you to run multiple test cases at once.

We also started charging for the Test LLM feature on March 1st.

- GPT 4o mini: $0.002 per message

- GPT 4o: $0.017 per message

- Claude 3.5 haiku: $0.006 per message

- Claude 3.5 sonnet: $0.021 per message

Coming Soon: Support for Single Prompt and Multi-Prompt testing.

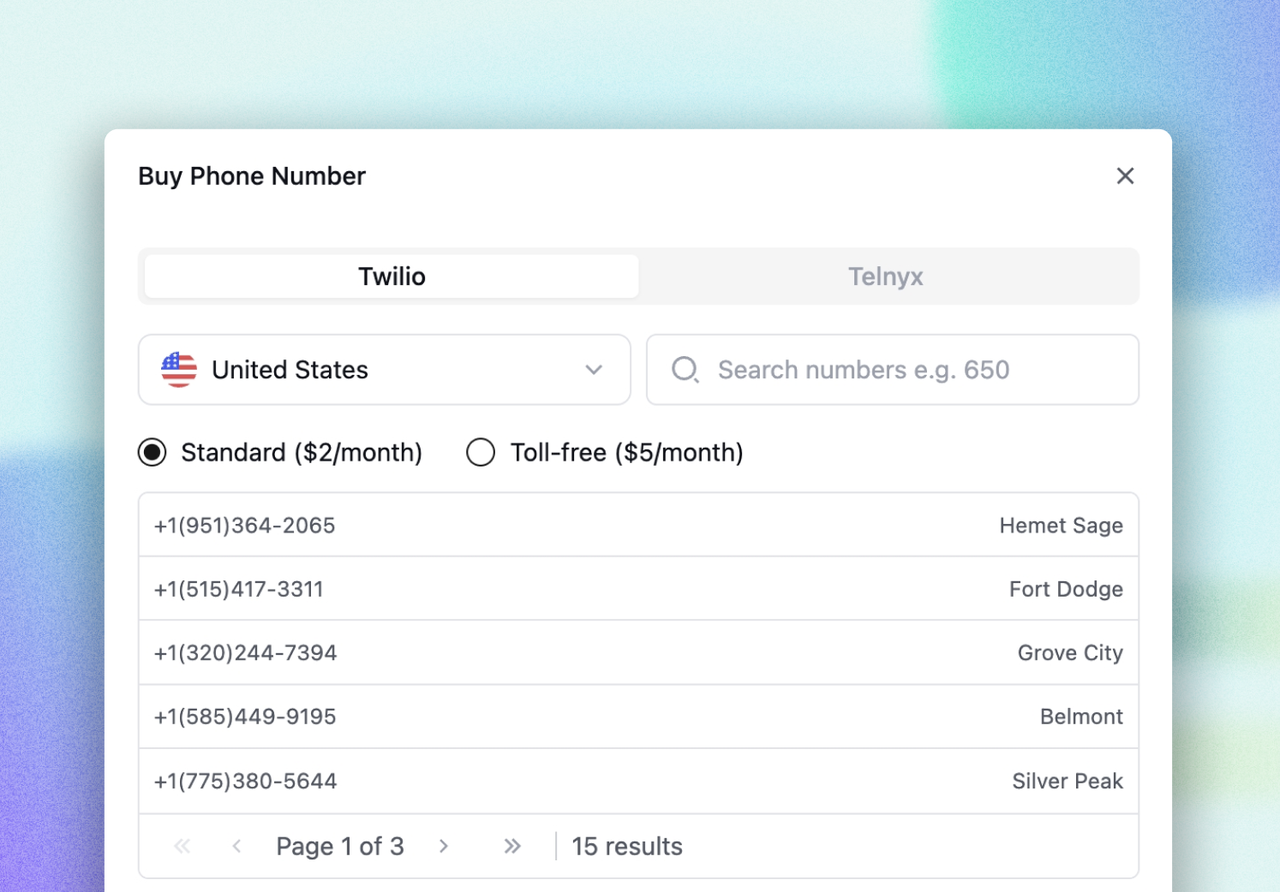

Telnyx Integration

You can now purchase Telnyx phone numbers directly on our platform.

Due to recent restrictions on Retell’s Twilio numbers, we’ve implemented a manual fallback. If you need a specific local number, you can purchase a Telnyx number for seamless outbound calling.

Import/Export Agents

You can now export any agent and import it into your other organizations.

Export Call History Data

Export the complete call history using the selected filters and column settings, mirroring the call history table.

Bug fixes and improvements

1. New Voices for OpenAI Realtime: openai-Ash, openai-Coral, openai-Sage

2. LLM and TTS Fallback for individual calls

- Even when the provider is up, two consecutive failed utterances now trigger a fallback within an individual call.

3. LLM deprecation & upgrade:

- claude 3 haiku will be deprecated, and all agents using that will be upgraded to claude 3.5 haiku;

- claude 3.5 sonnet will soon be upgraded to claude 3.7 sonnet
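
The per-call fallback rule in item 2 can be pictured with the Python sketch below. The `ProviderFallback` class and the provider names are hypothetical. Staying on the fallback for the rest of the call is an assumption of this sketch, not a documented detail.

```python
class ProviderFallback:
    """Track consecutive failed utterances and switch providers at a threshold."""

    def __init__(self, primary: str, fallback: str, threshold: int = 2) -> None:
        self.primary = primary
        self.fallback = fallback
        self.threshold = threshold
        self.consecutive_failures = 0
        self.active = primary

    def record(self, succeeded: bool) -> str:
        """Record one utterance result and return the provider to use next."""
        if succeeded:
            self.consecutive_failures = 0  # a success resets the streak
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.threshold:
                self.active = self.fallback  # assumption: stays switched for the call
        return self.active


tts = ProviderFallback(primary="provider_a", fallback="provider_b")
print(tts.record(False))  # provider_a (one failure is not enough)
print(tts.record(True))   # provider_a (success resets the streak)
print(tts.record(False))  # provider_a
print(tts.record(False))  # provider_b (two failures in a row)
```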

V1 API Deprecation Final Notice

We will deprecate the V1 APIs and certain deprecated fields in webhooks on February 5, 2025.

Below is a summary of what will be deprecated:

- V1 APIs and deprecated fields inside APIs

- The "data" field inside webhooks

- The old Retell legacy dashboard

To avoid disruptions, please complete your migration before the deadline.

See Docs

Listen to User Keypad Input (DTMF Capture)

AI phone agents can sometimes struggle with capturing long numbers accurately due to background noise or unclear speech. Our new Listen to User Keypad Input feature allows callers to enter numbers using their keypad, improving accuracy.

How It Works:

- Set your AI agent to prompt users to enter digits (e.g., card number, phone number).

- The system captures input directly from the keypad—eliminating transcription errors.

- Use post-call analysis to store and process the data as needed.

See Docs

Dynamic Routing

Need to route calls based on different customer requests? Try Dynamic Routing in call transfers.

Example:

- If the caller wants support, transfer to +1 (925) 222-2222

- If the caller wants sales, transfer to +1 (925) 333-3333

Set up call transfer logic to automatically direct calls to the right department.
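
Conceptually, the example above is a lookup from the caller's request to a transfer destination. The Python sketch below uses the two numbers from the example. The `transfer_number` helper and the request labels are hypothetical, and in practice the agent decides which condition applies during the call.

```python
from typing import Optional

# Destinations from the example above, written in E.164 form.
ROUTES = {
    "support": "+19252222222",
    "sales": "+19253333333",
}


def transfer_number(request: str, default: Optional[str] = None) -> Optional[str]:
    """Return the transfer destination for a request label, or the default."""
    return ROUTES.get(request.strip().lower(), default)


print(transfer_number("Support"))  # +19252222222
print(transfer_number("sales"))    # +19253333333
print(transfer_number("billing"))  # None
```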

API New Features

- Ringtone Duration

Customize how long the phone rings before a call is answered.

- Batch Call API

A new API for batch calls is now available.

- Telephony Identifier

Calls now include a telephony identifier (if available) for tracking purposes.

Delete Calls & Remove Sensitive Data

For compliance reasons, you can now delete call records completely or remove only the recording and call transcript.

User Questionnaire

To better understand our customers, we’ve introduced a new questionnaire for all users, including existing ones.

Please take a minute to fill it out—it will be available on our dashboard today.

Conversational Flow

We’ve launched the beta version of our Conversation Flow!

This new tool provides much finer control over conversation flows compared to single or multi-prompt agents, unlocking the ability to handle more complex scenarios with ease.

Key Features:

- Dynamic LLM Selection: Change the LLM for each individual node to tailor responses as needed.

- Precise Response Control: Fine-tune the exact words spoken during and after each function execution.

- Enhanced Node and Transition Definitions: Define edges and transitions with greater accuracy to create seamless interactions.

Highlights:

- Usage-Based Pricing: Call pricing is calculated based on the number of seconds spent within each node, providing transparent cost breakdowns.

- User-Friendly Interface: Replace prompt engineering with an intuitive, graphical input system for building agents.

- Minimized Hallucination Risks: Built-in ultra-guidance mechanisms to ensure accurate and reliable responses.

Get started with a pre-built template today and share your feedback with us!

Don’t Miss Our Next Bot Builders Session!

We received fantastic feedback from the last session, and we’re excited to bring even more value this time. If it’s not already on your calendar, make sure to save the date!

What to expect:

1️⃣ Live troubleshooting for Retell voice bots

2️⃣ Integration help for your projects

3️⃣ Best practices and advanced use cases

4️⃣ Networking with fellow bot builders

5️⃣ Sharing successful case studies

🕒 Time: Friday, 2 PM Pacific Time

📍 Location: Our Discord Channel: https://discord.com/invite/DTzvrQfncB

Other Improvements

1. Ringtone during warm transfers

• A ringtone is now available during warm transfers for a smoother experience.

Note: For cold transfers with the option to show the transferee’s number, there is currently no ringtone. This issue will be resolved within 1 week.

2. User DTMF input now shows in the transcript

• User-entered DTMF inputs are now captured and included in the call transcript for improved usability.

1. Pricing Adjustment and New Model

We’re excited to announce the following pricing updates:

1. Significant Price Reduction for GPT-4o Realtime: The price has been reduced from $1.50/min to $0.50/min, making it more accessible for your needs.

2. New Model Introduction: We’ve launched GPT-4o Mini Realtime, available at an affordable rate of $0.125/min.

These changes took effect on December 19th.

Additionally, we’ve updated our telephony pricing to better reflect Twilio’s actual costs. The previous price of $0.01 per minute will be adjusted to $0.015 per minute, effective January.

Thank you for your continued support as we make these updates to enhance our offerings and maintain service quality.

2. ElevenLabs Flash v2.5

We’ve added ElevenLabs's newest models: Flash v2.5 and Flash v2.

Switch to one of these models when you want blazing-fast latency.

The differences between the models:

- Flash v2.5: multilingual, faster, medium quality.

- Flash v2: English only, faster, medium quality.

- Turbo v2: high quality, medium speed.

3. New TTS Provider: PlayHT

We’ve integrated a new voice provider: PlayHT!

Some Retell voices now have versions available in both ElevenLabs and PlayHT.

You can seamlessly set one as a fallback voice for the other. Enjoy a more reliable voice experience!

Pricing:

Voice engine - PlayHT: $0.08/min

4. Pause Before Speaking

We’ve introduced a feature that adds a pause before the AI initiates speaking during a call. This resolves the issue where the AI starts speaking before the user has had a chance to bring the phone to their ear.

Feel free to try it out if you encounter similar situations.

5. Knowledge Base Retrieval Visibility

You can now view Knowledge Base retrieval chunks directly within the Test LLM feature.

This update provides greater visibility into how the Knowledge Base is being used.

Easily check if questions are retrieving the correct information and make adjustments as needed.

6. Other Improvements

- Knowledge Base Edit Features

- Metadata and dynamic variables in history tab

1. Batch Calling

Batch Calling is now live! This feature allows you to make multiple calls simultaneously by simply uploading an Excel sheet.

Here’s how it works:

- Send Now: Dispatch all calls immediately.

- Scheduled Time: Send out calls at a scheduled time.

Batch Calling queues your calls without hitting concurrency limits, ensuring a seamless experience.

Pricing: $0.005 per dial (20k calls for $100)

2. New Docs

We’ve completely overhauled our documentation to make it more user-friendly and comprehensive. We’ll continue updating it regularly based on your needs.

Have suggestions? Join us on Retell Discord and share your thoughts.

Want to find answers more easily? Join Retell Discord and ask questions in the #AI Evy channel.

3. Expanded Latency Metrics

We’ve added TTS (Text-to-Speech) latency details in call history and the Get-call API.

If you notice higher-than-usual TTS latency, switch to another TTS provider directly. (Please note that older latency fields are now deprecated.)

4. Bug Fixes and Improvements

- Resolved real-time API recording pitch issues.

- Enhanced functionality for calling multiple functions at once.

- Updated Knowledge Base API SDK code.

- Drastically reduced the latency added by voice speed adjustment (from ~100ms to ~5ms).

1. Branded Call

We’ve added the Branded Call feature!

Now, you can enable branded call functionality on each of your phone numbers. It’s a great way to build trust with your outbound calls and significantly improve conversion rates.

Once activated, the recipient will see your business name when you call.

2. Advanced Filters

You can now monitor your call records more effectively with these powerful new filters!

For example:

- Spot high-latency calls.

- Check long-duration calls.

- Listen to unsuccessful calls.

- Search for calls from specific customers.

- Review all transferred calls.

- Use your custom post-call analysis filters.

3. Usage Dashboard

A detailed dashboard now shows your daily call costs and costs by provider.

Easily track your spending at a glance.

4. Purchase Concurrency

You can now purchase additional concurrency directly on the dashboard.

Additionally, you can see your live concurrent call usage in the bottom-left corner of the dashboard.

5. Knowledge Base API

You can now access the Knowledge Base via API.

6. Structured Output

If you are using OpenAI’s LLM, we’ve added a Structured Output setting.

When enabled, it ensures responses follow your provided JSON Schema.

Note: This feature may increase the time required to save or update functions.

7. Claude 3.5 Haiku LLM

We’ve integrated Claude 3.5 Haiku with a pricing of $0.02/min.

Others:

- API Update: llm_websocket_url is now deprecated; use the new response_engine field.

- Live Transcription: Available on WebCall.

- API Key Rotation: Enhanced security for API keys.

1. Knowledge Base

You can now equip your Voice AI agents with your company’s knowledge in three simple ways:

- Scrape from Webpages: Upload your website’s sitemaps

- Upload Files: We support all document formats.

- Manually Add Text: You can also copy and paste the text.

For the "Scrape from Webpages" method, you can select auto-sync every 24 hours or manually sync anytime. No more manual updates!

Pricing for Knowledge Base:

- You will be charged an additional $0.005 per minute for using the Knowledge Base.

- Every workspace includes 10 free knowledge bases. Additional knowledge bases will be charged at $8/month, billed at the end of the month.
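
As a worked example of the two charges above, the Python sketch below estimates a month's Knowledge Base cost. The `knowledge_base_cost` helper is hypothetical, and it assumes the per-minute charge applies to every call minute that uses a knowledge base.

```python
from decimal import Decimal

PER_MINUTE = Decimal("0.005")   # additional charge per call minute
FREE_KNOWLEDGE_BASES = 10       # included with every workspace
EXTRA_MONTHLY = Decimal("8")    # per additional knowledge base, per month


def knowledge_base_cost(call_minutes: int, knowledge_bases: int) -> Decimal:
    """Estimate one month's cost: usage charge plus any extra knowledge bases."""
    usage = PER_MINUTE * call_minutes
    extra = max(0, knowledge_bases - FREE_KNOWLEDGE_BASES) * EXTRA_MONTHLY
    return usage + extra


# 2,000 call minutes with 12 knowledge bases: $10.00 usage + 2 x $8 = $26.00
print(f"${knowledge_base_cost(2000, 12):.2f}")  # $26.00
```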

2. Verified Phone Numbers

A must-have for outbound campaigns.

If your calls are being marked as spam or blocked by carriers, this verification process will prevent that from happening.

Simply submit your business profile, and after review, if your business is legitimate, you're good to go!

3. OpenAI Realtime API Integration

We’ve added the OpenAI Realtime API to our platform. The average latency is 600-1000ms, but pricing is currently $1.50/minute. We expect the pricing will go down soon.

4. Display Transferee’s Number

If you're using the call transfer feature and want the next agent to receive the caller’s number (instead of the Retell number), you can now enable this in the call transfer settings.

5. Workspace

We’ve added the Workspace feature.

• For companies, you can now invite your teammates.

• For agencies, you can now create different organizations for your clients.

Others:

- Improved Webhook Verification for Python: https://github.com/RetellAI/retell-custom-llm-python-demo/commit/131ae64f86e38debb5fdc87168cb7240649e385f (sample code: https://docs.retellai.com/features/webhook#sample-code)

- Dark Mode.

- Pricing & Latency details.

1. Dashboard Overhaul

We’ve completed a full dashboard overhaul.

- A more user-friendly and intuitive agent builder.

- Added more functionality to the history tab.

- Overhauled the billing portal.

- A cleaner and more consistent UI.

2. Warm Transfer

We’ve added a warm transfer feature.

If you need to provide background information and hand off the call to the next agent, this feature allows you to set up a prompt or static message for smooth transitions.

3. Disable Transcript Formatting

We’ve added a toggle to disable transcript formatting. This can help resolve the ASR (Automatic Speech Recognition) errors we recently discovered:

- Phone numbers were being misinterpreted as timestamps.

- Double numbers were not being accurately captured.

If you encounter issues related to number transcription, try out this toggle.

4. Cal.com Custom Fields

If you’ve added custom fields in Cal.com, you can now use them in Retell.

When using Cal.com functions, you can instruct the agent to collect specific information, and it will automatically display the collected data in the booking event.

Bug fixes and Improvements

- Boosted keywords

- Custom function timeout

- Maintaining Consistent Voice Tonality

1. More Languages

We now support more languages:

- zh-CN (Chinese - China)

- ru-RU (Russian - Russia)

- it-IT (Italian - Italy)

- ko-KR (Korean - Korea)

- nl-NL (Dutch - Netherlands)

- pl-PL (Polish - Poland)

- tr-TR (Turkish - Turkey)

- vi-VN (Vietnamese - Vietnam)

Simply change the language in the settings panel on the agent creation page.

2. Max Call Duration

You can now set the maximum duration for calls in minutes to prevent spam.

3. Extended Voicemail Detection

You can set the duration for detecting voicemail. In some B2B use cases, there are welcome messages before going to voicemail. Setting a longer voicemail detection time can solve this issue.

4. LLM Temperature

You can adjust the LLM Temperature to get more varied results. The default setting is more deterministic and provides better function call results.

5. Agent Voice Volume

You can now control the volume of the agent’s voice.

Important Notification

- We will fully shut down the Audio Websocket and V1 call APIs on 12/31/2024.

Bug fixes and Improvements

- DTMF issue is now fixed.

- Failed inbound calls will now have an entry in history.

- Addressed inaudible speech.

- Added retry for inbound dynamic variable webhook.

- Added timeout for Retell webhook.

- Fixed a bug where custom voice was accidentally overwritten.

- Improved voicemail detection performance.

Community Videos

1. DTMF (Navigate IVR)

Guide the voice agent through IVR systems with button presses (e.g., “Press 1 to reach support”).

2. Voicemail Handling

- You can now configure how the agent responds when a call reaches voicemail.

- Dashboard only, not available for API.

3. SIP Trunking Integration

You can now integrate Retell AI with your telephony providers, using your own phone numbers (e.g., Twilio, Vonage). This works with both Retell LLM and Custom LLM.

Integration options:

- Elastic SIP trunking

- Dial to SIP Endpoint

4. Multilingual Agent (English & Spanish)

You can now create a multilingual agent that speaks both English and Spanish in the same call.

5. Pronunciation

You can also control how certain words are pronounced. This is useful when you want to make sure certain uncommon words are pronounced correctly.

6. Voice model selector

We’ve added new settings for voice model selection:

- Turbo v2.5: Fast multilingual model

- Turbo v2: Fast English-only model with pronunciation tag support

- Multilingual v2: Rich emotional expression with a nice accent.

1. Audio Infrastructure Update

We have upgraded our audio infrastructure to WebRTC, moving away from the original websocket-based system. This change ensures better scalability and reliability:

- Web Calls: All web calls are now on WebRTC.

- Phone Calls: Migration to WebRTC is in progress, pending resolution of some SIP blockers.

2. Call API V2

We've introduced Call API V2, which separates phone call and web call objects and includes a few field and API changes:

- API Compatibility: Old APIs will be supported until at least 9/1, with the possibility of extended support.

- Migration Guide: For details on transitioning, please visit our migration document.

- Backend Node and Python SDKs updated.

- New frontend client SDK for web call users: https://docs.retellai.com/make-calls/web-call

3. Concurrency Enhancements

- Default Limits: The default concurrency limit for all users has been increased to 20.

- Concurrency API: A new API to check your current concurrency and limit is now available here.

4. Separation of Inbound and Outbound

- Agent Separation: Our APIs now support separate inbound and outbound agents, with the option to disable either as needed.

- Nickname Field: Easily find specific numbers with the addition of a nickname field for better organization.

5. Bug Fix and Reliability Improvement

- Enhanced all modules with a smarter retry and failover mechanism.

- Resolved issues with audio choppiness and looping.

- Corrected the display of function call results in the LLM playground.

- Addressed the scrolling issue in the history tab.

6. Usage Limits

In response to abuse and misuse of our platform, we added some usage limits accordingly:

- Scam Detection: Implemented to safeguard users.

- Call Length Limit: Maximum of 1 hour.

- Token Length Limit: Maximum of 8192 tokens for Retell LLM. For multi-state prompts, this includes the longest state plus the general prompt.

- Please contact us if you need exceptions.
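
The token rule for multi-state prompts can be checked with a few lines of Python. This is a sketch with hypothetical helper names. It takes token counts as inputs, since the tokenizer used for counting is not specified here.

```python
MAX_PROMPT_TOKENS = 8192  # limit stated above for Retell LLM


def effective_prompt_tokens(general_prompt_tokens: int, state_prompt_tokens: list[int]) -> int:
    """General prompt plus the longest single state, per the rule above."""
    longest_state = max(state_prompt_tokens, default=0)
    return general_prompt_tokens + longest_state


def within_limit(general_prompt_tokens: int, state_prompt_tokens: list[int]) -> bool:
    """True when the effective prompt size fits inside the limit."""
    return effective_prompt_tokens(general_prompt_tokens, state_prompt_tokens) <= MAX_PROMPT_TOKENS


# Example counts (made up): the longest state, 6,800 tokens, pushes the total over.
print(effective_prompt_tokens(1500, [2000, 6800, 900]))  # 8300
print(within_limit(1500, [2000, 6800, 900]))             # False
print(within_limit(1500, [2000, 6000, 900]))             # True
```

Only the longest state counts, so shortening shorter states does not help. Trim the general prompt or the largest state instead.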

1. SOC 2 Type 1 Certification

We've obtained the Vanta SOC 2 Type 1 certification and are currently awaiting the SOC 2 Type 2 certification.

2. Debugging mode

Click on "Test LLM" to enter debugging mode. It works with both single prompts and stateful multi-prompt agents. Now, you can test the LLM without speaking. You can create, store, and edit the conversation.

Pro tip:

For multi-state prompt agents, you can change the starting point to a middle state and test from there.

3. TTS Fallback

Your stability is our top priority. We've added the capability to specify a fallback for TTS. In case of an outage with one provider, your agent can use another voice from a different provider.

4. GPT-4o and pricing

The OpenAI GPT-4o LLM is now available on Retell. The voice interface API has not been released yet, but we plan to integrate it as soon as it becomes available. Stay tuned!

The pricing for GPT-4o is $0.10 per minute (optional).

5. Add Pronunciation Input

You can now guide the model to pronounce a word, name, or phrase in a specific way. For example: "word": "actually", "alphabet": "ipa", "phoneme": "ˈæktʃuəli".

This feature is currently available only via the API but will soon be added to the dashboard.
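
For reference, the example entry can be built and serialized in Python as sketched below. The three keys come from the example above. The `pronunciation_entry` helper and the enclosing `pronunciation_dictionary` key are assumptions for illustration, so check the API reference for the exact request shape.

```python
import json


def pronunciation_entry(word: str, alphabet: str, phoneme: str) -> dict[str, str]:
    """Build one pronunciation entry using the three keys from the example."""
    if not (word and alphabet and phoneme):
        raise ValueError("word, alphabet, and phoneme must all be non-empty")
    return {"word": word, "alphabet": alphabet, "phoneme": phoneme}


# "pronunciation_dictionary" is an assumed field name, used only for illustration.
payload = {"pronunciation_dictionary": [pronunciation_entry("actually", "ipa", "ˈæktʃuəli")]}

# ensure_ascii=False keeps the IPA characters readable instead of \u escapes.
body = json.dumps(payload, ensure_ascii=False)
print(body)
```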

6. Normalize for speech option

Normalizes parts of the text (numbers, currency, dates, etc.) to their spoken form for more consistent speech synthesis.

7. End call after user stay silent

You can now have the call end automatically if the user stays silent for a set period after the agent speaks.

The minimum value allowed is 10,000 ms (10 s). By default, this is set to 600,000 ms (10 min).
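
If you set this value from your own code, a small guard like the Python sketch below catches out-of-range values before they reach the API. The `resolve_silence_timeout` helper is hypothetical. The two constants are the minimum and default stated above.

```python
from typing import Optional

MIN_SILENCE_MS = 10_000       # 10 s, the minimum stated above
DEFAULT_SILENCE_MS = 600_000  # 10 min, the default stated above


def resolve_silence_timeout(value_ms: Optional[int] = None) -> int:
    """Return the timeout to use, applying the default and enforcing the minimum."""
    if value_ms is None:
        return DEFAULT_SILENCE_MS
    if value_ms < MIN_SILENCE_MS:
        raise ValueError(f"silence timeout must be at least {MIN_SILENCE_MS} ms, got {value_ms}")
    return value_ms


print(resolve_silence_timeout())        # 600000
print(resolve_silence_timeout(30_000))  # 30000
```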

8. Miscellaneous Updates

- Voice Lists API: You can now get available voices via API. API References

- Ambient Sound: New ambient sound for the call center and ambient sound volume control.

- Asterisks Fix: We noticed that OpenAI models recently started generating asterisks, causing some problems. We have applied a fix to stop this.

- SDK Updated

Article

Retell AI lets companies build ‘voice agents’ to answer phone calls

TechCrunch

1. Enhanced Call Monitoring

Call Analysis: We've introduced metrics like Call Completion Status, Task Completion Status, User Sentiment, Average End-to-End Latency, and Network Latency for comprehensive monitoring. You can access these directly on the dashboard or through API.

Disconnection Reason Tracking: Get insights into call issues with the addition of "Disconnection Reason" in the dashboard and "get-call" object. For more details, refer to our Error Code Table.

Function Call Tracking: Transcripts now include function call results, offering a seamless view of when functions were triggered and what their outcomes were. Available in the dashboard and get-call API. Custom LLM users can send the tool call invocation event and the tool call result event to pass function calling results to us, so that they appear in the interleaved transcript and the dashboard shows when each function was triggered.

2. New Features

Reminder Settings: You can now configure reminder settings to define the duration of silence before an agent follows up with a response. Learn more.

Backchanneling: Backchannel is the ability for the agent to make small noises like “uh-huh”, “I see”, etc. during user speech, to improve engagement of the call. You can set whether to enable it, how often it triggers, what words are used. Learn more.

“Read Numbers Slowly”: Optimize the reading of numbers (or anything else) by making sure it is read slowly and clearly. How to Read Slowly.

Metadata Event for Custom LLM: Pass data from your backend to the frontend during a call with the new metadata event. See API reference.

3. Major Upgrade to Python Custom LLM (Important)

Improved async OpenAI performance for better latency and stability. Highly recommended for existing Python Custom LLM users to upgrade to the latest version.

4. Webhook Security

Improved webhook security with the signature "verify" function in the new SDK. Find a code example in the custom LLM demo repositories and in the documentation.

Additionally, the webhook includes a temporary recording for users who opt out of storage; please note that this recording will expire in 10 minutes.

This week’s video

We’ve got a shout out in the latest episode of Y Combinator’s podcast Lightcone.

1. Retell LLM Updates

LLM Model Options: Choose between GPT-3.5-turbo and GPT-4-turbo, with additional models coming soon. Available through both our API and dashboard.

Interruption Sensitivity Slider: Adjust how easily users can interrupt the agent. This feature is now accessible in our API and dashboard.

2. Pricing Updates

We've updated our pricing structure to be clearer and more modular.

Conversation voice engine API

- With OpenAI / Deepgram voices ($0.08/min)

- With Elevenlabs voices ($0.10/min)

LLM Agent

- Retell LLM - GPT 3.5 ($0.02/min)

- Retell LLM - GPT 4.0 ($0.20/min)

- Custom LLM (No charge)

Telephony

- Retell Twilio ($0.01/min)

- Custom Twilio (No charge)
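
To see how the modular prices combine, the Python sketch below adds one option from each group. It assumes the three components are simply summed per minute. The dictionary keys and helper names are labels invented for this example.

```python
from decimal import Decimal

# Per-minute prices from the list above; the keys are illustrative labels.
VOICE_ENGINE = {"openai_or_deepgram": Decimal("0.08"), "elevenlabs": Decimal("0.10")}
LLM_AGENT = {"retell_gpt_3.5": Decimal("0.02"), "retell_gpt_4": Decimal("0.20"), "custom": Decimal("0")}
TELEPHONY = {"retell_twilio": Decimal("0.01"), "custom_twilio": Decimal("0")}


def per_minute_rate(voice: str, llm: str, telephony: str) -> Decimal:
    """Sum one option from each group (assumes the components add linearly)."""
    return VOICE_ENGINE[voice] + LLM_AGENT[llm] + TELEPHONY[telephony]


def call_cost(minutes: int, voice: str, llm: str, telephony: str) -> Decimal:
    """Estimated cost of a call of the given length in minutes."""
    return per_minute_rate(voice, llm, telephony) * minutes


print(per_minute_rate("elevenlabs", "retell_gpt_3.5", "retell_twilio"))  # 0.13
print(call_cost(10, "elevenlabs", "retell_gpt_3.5", "retell_twilio"))    # 1.30
print(per_minute_rate("openai_or_deepgram", "custom", "custom_twilio"))  # 0.08
```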

3. Monitoring & Debugging Tools

Dashboard Updates: The history tab now includes a public log, essential for debugging and understanding your agent's current state, tool interactions, and more.

Enhanced API Responses: Our get-call API now provides latency tracking for LLM and websocket roundtrip times.

4. Security Features

Ensure the authenticity of requests with our new IP verification feature. Authorized Retell server IPs are: 13.248.202.14, 3.33.169.178.
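
A minimal allowlist check for the two addresses above might look like this in Python. The `is_from_retell` helper is hypothetical. The sketch also assumes you can see the real client address: behind a proxy or load balancer you would need to read it from whatever header your infrastructure sets.

```python
from ipaddress import ip_address

# The two server IPs listed above.
RETELL_SERVER_IPS = frozenset(ip_address(ip) for ip in ("13.248.202.14", "3.33.169.178"))


def is_from_retell(remote_addr: str) -> bool:
    """True if the request's source address is one of the listed server IPs."""
    try:
        return ip_address(remote_addr) in RETELL_SERVER_IPS
    except ValueError:
        return False  # not a valid IP address at all


print(is_from_retell("13.248.202.14"))  # True
print(is_from_retell("203.0.113.7"))    # False
print(is_from_retell("not-an-ip"))      # False
```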

5. Other Improvements

Enhancements for Custom LLM Users

- You can now turn off interruption for each response (no_interruption_allowed in doc)

- Ability to let agent interrupt / speak when no response is needed (doc)

- Config event to control whether to enable reconnection, and whether to send the call details at the beginning of the call

- New ping pong events and reconnection mechanism in LLM websocket: will reconnect the websocket if connection lost, and will also track server roundtrip latency (available in get-call API)

Web Call Frontend Upgrades

- Frontend SDK now contains a lot more events that are helpful for animation

- "audio": real time audio played in the system

- "agentStartTalking", "agentStopTalking": track whether agent is speaking, not applicable when ambient sound is used

- "enable_audio_alignment" option to get audio buffer and text alignment in frontend. Not supported in frontend SDK.

SDK improvement: Our updated SDK maintains backward compatibility, ensuring smooth transitions and consistent performance.

🌟 This week’s Demo: Introducing Retell LLM

1️⃣ Retell LLM (Beta)

Low Latency, Conversational LLM with Reliable Function Calls

Experience lightning-fast voice AI with an average end-to-end latency of just 800ms with our LLM, mirroring the performance featured in the South Bay Dental Office demo on our website. Our LLM has been fine-tuned for conciseness and a conversational tone, making it perfect for voice-based interactions. It is also engineered to reliably initiate function calls.

Single-Prompt vs. Stateful Multi-Prompt Agents

We provide two options for creating an agent. The Single-Prompt Agent is ideal for straightforward tasks that require a brief input. For scenarios where the agent's prompt is lengthy and the tasks are too complex for a single input to be effective, the Stateful Multi-Prompt Agent is recommended. This approach divides the prompt into various states, each with its own prompt, linked by conditional edges.

User-Friendly UI for Agent Creation and API for Programmatic Agent Creation

Our dashboard allows you to quickly create an LLM agent using prompts and the drag-and-drop functionality for stateful multi-prompt agents. You can seamlessly build, test, and deploy agents into production using our dashboard or achieve the same programmatically via our API.

Pre-defined Tool Calling Abilities such as Call Transfer, Ending Calls, and Appointment Booking

Leverage our pre-defined tool calling capabilities, including ending calls, transferring calls, checking calendar availability (via Cal.com), and booking appointments (via Cal.com), to easily build real-world actions. We also offer support for custom tools for more tailored actions.

Maintaining Continuous Interaction During Actions That Take Longer

To address delays in actions that require more time to complete, you can activate this feature. It enables the agent to maintain a conversation with the user throughout the duration of the function call. This ensures the voice AI agent keeps the interaction smooth and avoids awkward silences, even when function calls take longer.

2️⃣ SDK Update v3.4.0 Announcement

Please note, the previous SDK version will be phased out in 60 days. We encourage you to transition to the latest SDK version.

3️⃣ Status Page

Stay informed with system status on our new status page.

4️⃣ Public Log in get-call API

To streamline your troubleshooting process, we've introduced a public log within our get-call API. This new feature aids in quicker issue resolution and smoother integration, detailed further at the link below.

1️⃣ More Affordable Premium Voices

Thanks to recent cost reductions in our premium voice service, we're excited to pass these savings on to our customers. We're pleased to announce a new, lower price for our premium voice service—now just $0.12 per minute, down from $0.17. Enterprise pricing will also see similar reductions (please contact us at founders@retellai.com for more information).

Please note: The adjusted pricing will take effect from March 1st, and billing will be charged at the end of this month.

2️⃣ Customizable Dashboard Settings

Gain more control over your voice output with new dashboard settings.

- Ambient Sound;

- Responsiveness;

- Voice Speed;

- Voice Temperature;

- Backchanneling;

Tailor your voice interactions to suit your precise needs and preferences for a truly personalized experience.

3️⃣ Secure Webhooks for Enhanced Security

Boost your communication security with our new webhook signatures. This feature enables you to confirm that any received webhook genuinely comes from Retell, providing an additional layer of protection.
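
The general idea behind signed webhooks can be sketched in Python with a generic HMAC-SHA256 check. This is not Retell's actual signing scheme, which is not described here. The secret, the payload, and the function names are placeholders, and a real integration should follow the verification method given in the official documentation.

```python
import hashlib
import hmac


def sign(body: bytes, secret: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_authentic(body: bytes, signature: str, secret: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign(body, secret), signature)


secret = b"example-shared-secret"    # placeholder, not a real key
body = b'{"event":"call_ended"}'     # placeholder payload
signature = sign(body, secret)       # what the sender would attach to the request

print(is_authentic(body, signature, secret))                      # True
print(is_authentic(b'{"event":"tampered"}', signature, secret))   # False
```

Verifying against the raw bytes of the request body matters, because re-serializing parsed JSON can change whitespace or key order and break the comparison.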

4️⃣ Launch of Multilingual Support

We're excited to announce the launch of our multilingual version, now supporting German, Spanish, Hindi, Portuguese, and Japanese. Access and set your preferred language through our dashboard.

While this feature is currently available via API, we're working on extending support to our SDKs shortly.

5️⃣ Opt-Out of Transcripts and Recordings Storage

Based on user feedback, we've introduced an opt-out option for storing transcripts and recordings. This feature, available in our API and the Playground, gives you more control over your data and privacy.

Dear Retell Community,

We are excited to share several updates and new features with you. Our goal is to continually improve our offerings to better meet your needs. Here's what's new:

1️⃣ Enterprise Plan and Discounts Now Available

We're excited to announce the availability of our discounted enterprise tiered pricing. For more information on that, please contact our team at founders@retellai.com.

2️⃣ Enhanced Conversation with Lower Latency

We've launched improvements to further reduce latency (by approximately 30%). Try our demo on the website again and experience the magical speed.

3️⃣ New Agent Control Parameters

We've introduced additional agent control parameters for greater customization, including:

- Responsiveness: Adjust how responsive your agent is to utterances.

- Voice Speed: Control the speech rate of your agent to be faster or slower.

- Boost Keywords: Prioritize specific keywords for speech recognition.

These parameters have been added to our API. Documentation is being updated, and we are also working on incorporating these features into the SDKs. For more details, visit Create Agent API Reference.
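To make the three parameters concrete, here is a small Python sketch that assembles them into a settings payload. The field names `responsiveness`, `voice_speed`, and `boost_keywords`, the helper `build_agent_settings`, and the example values are assumptions made for illustration; the Create Agent API Reference is the authority on the real field names, types, and allowed ranges.

```python
import json


def build_agent_settings(responsiveness, voice_speed, boost_keywords):
    """Assemble a settings payload (field names are hypothetical)."""
    # Drop blank entries and duplicates while keeping the original order.
    cleaned = []
    for keyword in boost_keywords:
        keyword = keyword.strip()
        if keyword and keyword not in cleaned:
            cleaned.append(keyword)
    return {
        "responsiveness": responsiveness,
        "voice_speed": voice_speed,
        "boost_keywords": cleaned,
    }


if __name__ == "__main__":
    settings = build_agent_settings(0.8, 1.1, ["Retell", " Retell ", "", "Twilio"])
    # This JSON would form part of the request body sent when creating an agent.
    print(json.dumps(settings, indent=2))
```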

4️⃣ New Call Control Parameter: end_call_after_silence_ms

This parameter enables the automatic termination of calls following a specified duration of user inactivity. It's designed to streamline operations and improve efficiency.
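The behavior described above can be sketched as a simple timeout check. This is only a model of the idea, not the service's implementation: the function `should_end_call`, the millisecond timestamps, and the choice to end the call once the silence reaches the threshold (rather than strictly exceeds it) are all assumptions.

```python
def should_end_call(last_user_activity_ms: int, now_ms: int,
                    end_call_after_silence_ms: int) -> bool:
    """Return True once the user has been silent for at least the threshold."""
    silence_ms = now_ms - last_user_activity_ms
    return silence_ms >= end_call_after_silence_ms


if __name__ == "__main__":
    threshold_ms = 10_000    # hypothetical setting: end after 10 seconds of silence
    last_activity_ms = 5_000  # the user last spoke 5 seconds into the call

    for now_ms in (9_000, 14_999, 15_000):
        print(now_ms, should_end_call(last_activity_ms, now_ms, threshold_ms))
```

In this model any new user speech simply moves `last_user_activity_ms` forward, which restarts the countdown.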

5️⃣ Word-Level Timestamps in Transcripts

To enhance the utility of our transcripts, we are now including word-level timestamps. This feature is pending documentation updates, so stay tuned for more information at Audio WebSocket API Reference.
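As an example of what word-level timestamps make possible, the Python sketch below picks out the words spoken inside a given time window. Since the documentation is still pending, the data shape is purely hypothetical: a list of objects with `word`, `start`, and `end` keys, with times in seconds. The helper name `words_between` is likewise invented for illustration.

```python
def words_between(words, start_s, end_s):
    """Return the words whose time span lies fully inside [start_s, end_s]."""
    return [w["word"] for w in words
            if w["start"] >= start_s and w["end"] <= end_s]


if __name__ == "__main__":
    # Hypothetical transcript fragment with per-word timing in seconds.
    transcript_words = [
        {"word": "Hello", "start": 0.0, "end": 0.4},
        {"word": "there", "start": 0.5, "end": 0.8},
        {"word": "friend", "start": 1.2, "end": 1.7},
    ]
    print(words_between(transcript_words, 0.0, 1.0))  # ['Hello', 'there']
```

The same pattern can be used to align transcript text with a recording, for instance to highlight words during playback.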

6️⃣ [Auto-reconnection] Web Call Updates - Client JS SDK 1.3.0

For users utilizing web calls, our latest client JavaScript SDK (version 1.3.0) now supports auto-reconnection of the socket in case of network disconnections. This ensures a more reliable and uninterrupted service.

We are dedicated to providing you with the best possible service and experience.

We welcome your feedback and are here to support you in making the most out of these new features.

Best regards,

Retell AI team 💛

1️⃣ Domain changed

Please note that our domain has changed. Make sure to update your bookmarks and records to stay connected with us seamlessly.

2️⃣ New TTS provider: Deepgram

We've introduced Deepgram as a new TTS provider. Explore it on the Dashboard and find your favorite voice. The price is still $0.10/minute ($6/hour).

We've also added more voice choices from ElevenLabs, giving you more stable and diverse voice options for your projects.

3️⃣ New Control Parameters: Voice Temperature

Gain control over the stability and variability of your voice output, allowing for more tailored and dynamic audio experiences.

4️⃣ New Agent Ability: Backchanneling

Enhance interactions with the ability for the agent to backchannel, using phrases like "yeah" and "uh-huh" to express interest and engagement during conversations.

5️⃣ Python Backend Demo Now in FastAPI

By popular demand, our Python backend demo has transitioned to FastAPI. It includes Twilio integration and a simple function calling example, providing a more robust and user-friendly experience.

6️⃣ New Version of Web Frontend SDK

Our updated web frontend SDK makes integration easier and improves performance, allowing you to access live transcripts directly on your web frontend.

7️⃣ Improved Performance in Noisy Environments

Our product now offers improved performance even in noisy settings, ensuring your voice interactions remain clear and uninterrupted.

1️⃣ New pricing tier released

Dear Retell Community,

We are thrilled to announce a new and significantly more affordable pricing tier featuring OpenAI's TTS. Effective immediately, you can take advantage of our state-of-the-art voice conversation API with OpenAI TTS at the new rate of $0.10 per minute.

This adjustment reflects our commitment to providing you with exceptional value and enhancing your voice interaction experience.

We believe this new pricing will make our product more accessible and allow you to leverage our technology for a wider range of applications.

2️⃣ SDK updated

We've updated our SDKs. Upgrade your Retell SDK to the latest version to stay in the loop.

- https://www.npmjs.com/package/retell-sdk

- https://pypi.org/project/retell-sdk/

We've added a frontend JS SDK that abstracts away the details of capturing the microphone and setting up playback.

- https://www.npmjs.com/package/retell-client-js-sdk

We've updated our documentation at https://docs.re-tell.ai/guide/intro to help with integration.

3️⃣ Open-source demo repo

We've open-sourced the LLM and Twilio code that powers our dashboard as a demo:

Node.js demo:

https://github.com/adam-team/retell-backend-node-demo

Python demo:

GitHub - adam-team/python-backend-demo

We've also open-sourced the web frontend demo:

React demo using SDK:

GitHub - adam-team/retell-frontend-reactjs-demo

React demo using native JS:

GitHub - adam-team/retell-frontend-reactjs-native-demo

API Changes & New Features

Dear Retell Community,

In our quest to deliver a human-level conversation experience, we've made a strategic decision to refocus our efforts on voice conversation quality, while scaling back on certain other nice-to-haves. The current API will be phased out after this Wednesday at 12:00 PM. We warmly invite you to adopt our new API, designed to continue providing you with a magical AI conversation experience long-term.

🌟 Key Changes:

- LLM Open Sourced: Our LLM will no longer be included in the API. Instead, use the "Custom LLM" feature to integrate your own LLM into the conversation pipeline. Our LLM will remain accessible on the dashboard for demo purposes.

- Twilio and Phone Call Features Open Sourced: These features are removed from the API but remain accessible on the dashboard for demo purposes.

- Custom LLM Integration: Our API now exclusively supports the integration of your own LLM via a websocket, requiring a specified websocket URL for agent creation.

- SDK Updates: We're updating our Node.js SDK to align with these changes, with the Python SDK update to follow soon.

🌟 New Features:

- LIVE Transcript Feature: Leverage real-time transcription for more informed LLM responses.

- Open Sourced Repositories: Gain more customizability with our open-sourced LLM voice agent implementation and Twilio and phone call features.

- Reduced Pricing: Enjoy our service at a 15% discount, now priced at $0.17 per minute.

We understand that this transition may require adjustments in your current setup, and we are here to support you through this change. Please feel free to reach out to us for any assistance or further information regarding the new API.

Thank you for your understanding and continued support.

Best regards,

Retell AI Team 💛
